
Knowledge Distillation Meets Self-Supervision & Prime-Aware Adaptive Distillation #75


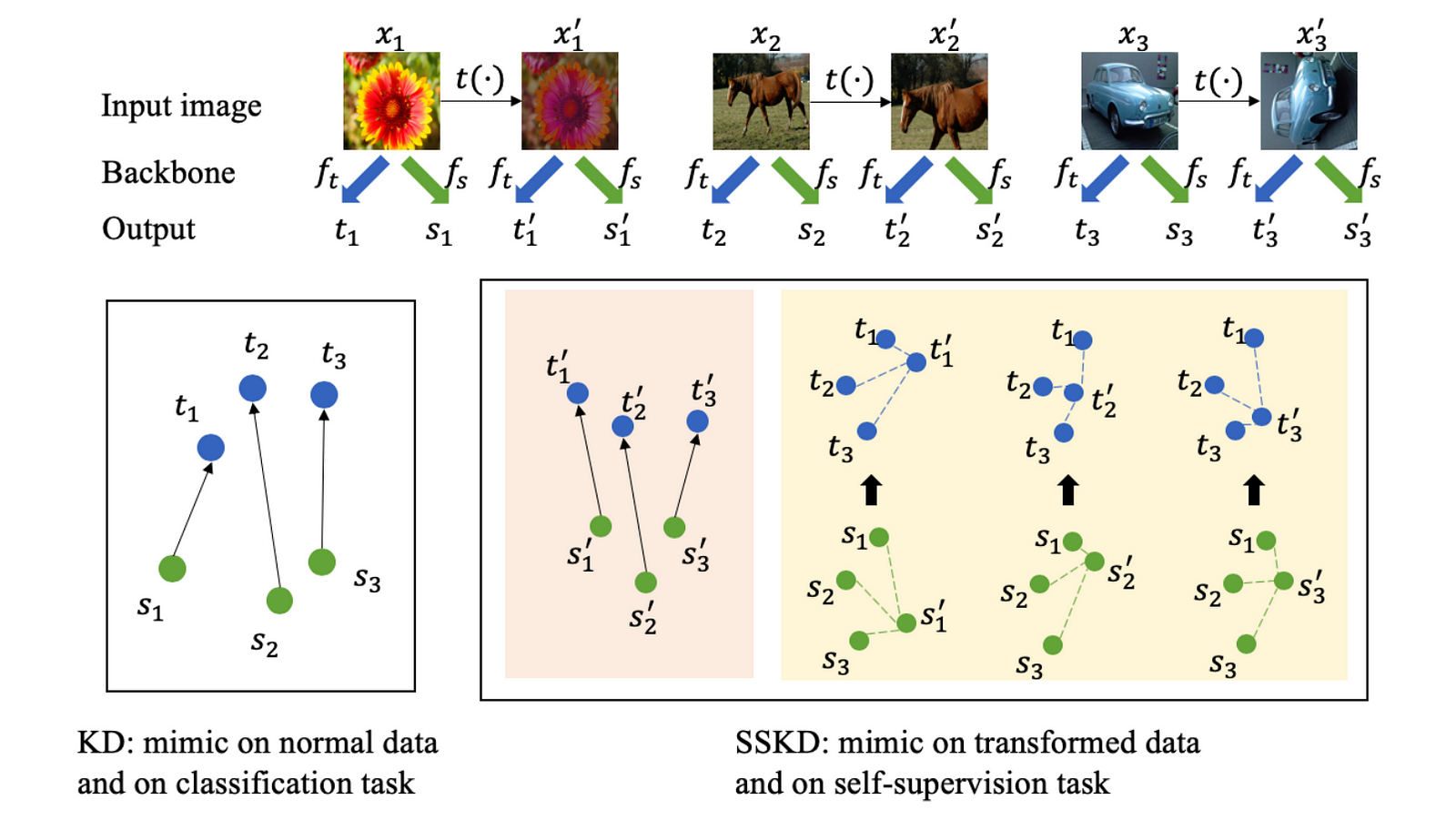

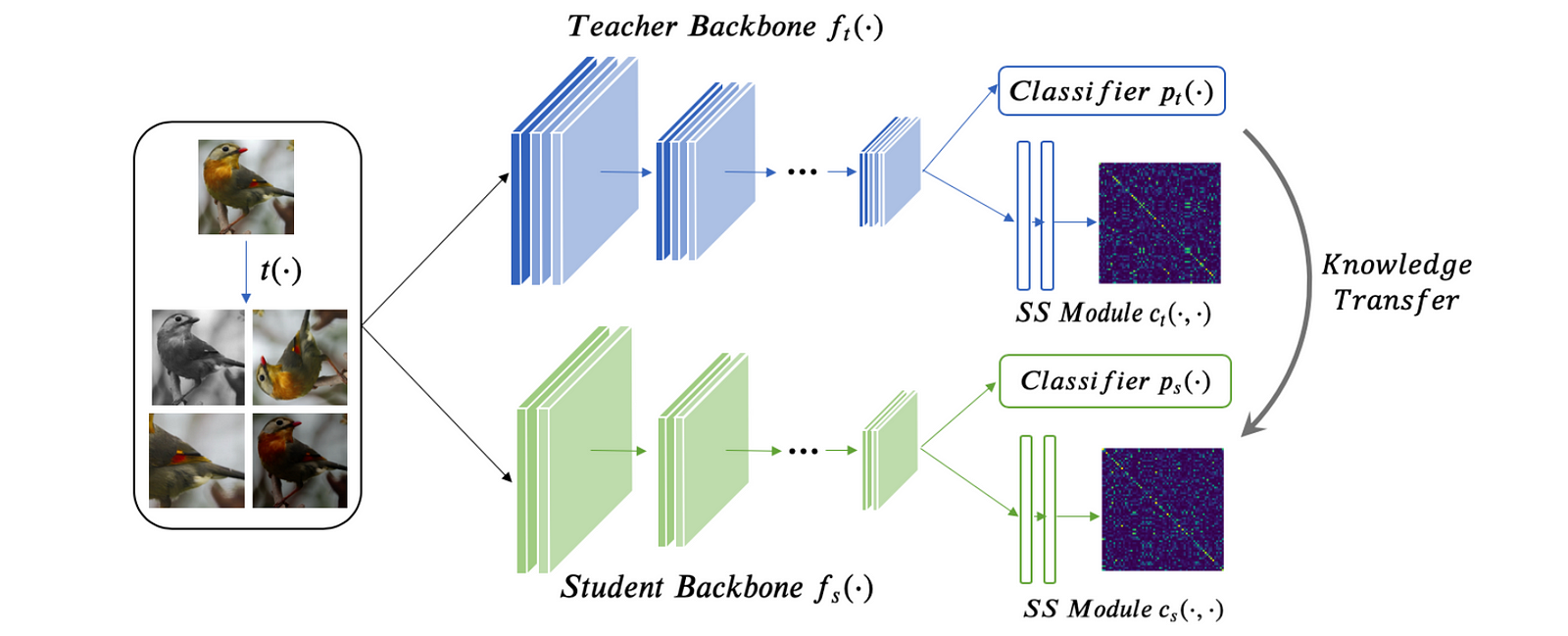

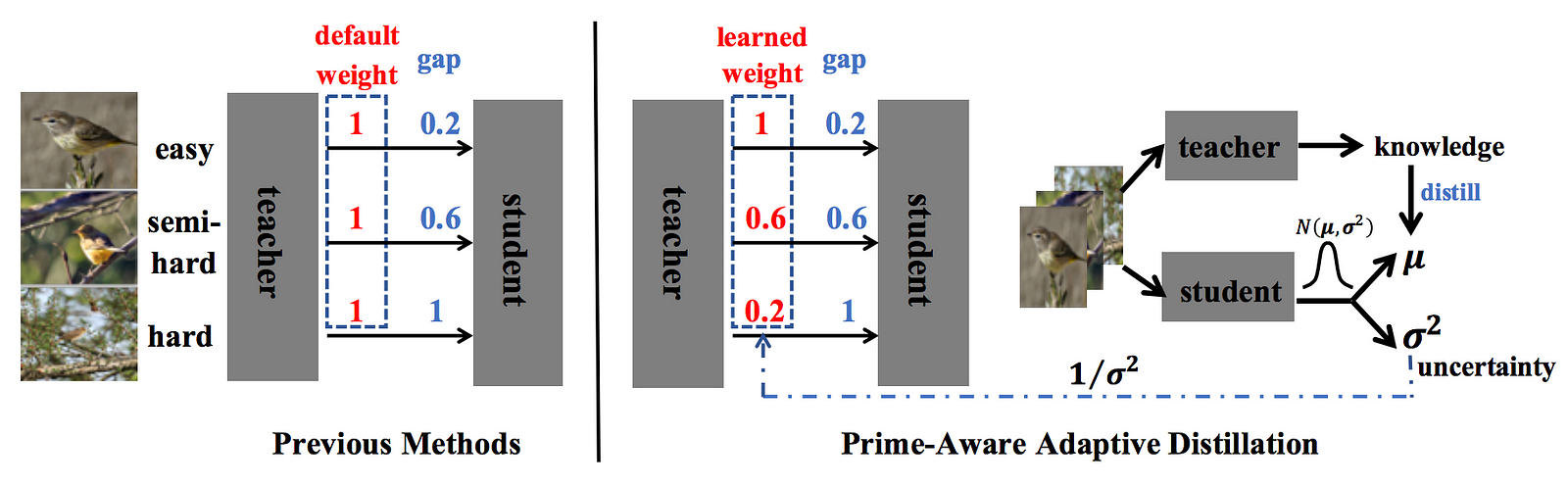

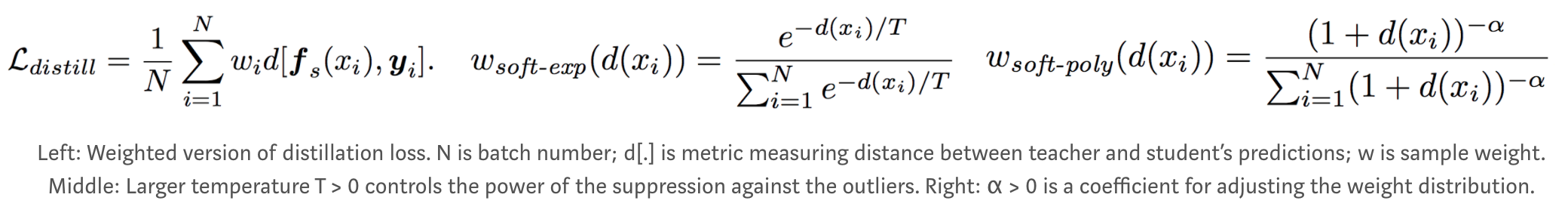

Prior Approaches on Knowledge Distillation

Knowledge distillation aims to obtain a smaller student model from a typically larger teacher model by matching the information hidden in the two models. This information can be final soft predictions, intermediate features, attention maps, or relations between samples. See this complete review.

Highlights
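As a rough illustration of the first two kinds of matched information listed above (soft predictions and intermediate features), the sketch below computes a Hinton-style temperature-scaled KL loss on logits and a mean-squared-error loss on features. It is a minimal, dependency-free sketch, not the method of either paper discussed in this issue; the function names and the default temperature of 4.0 are assumptions chosen for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits (max-subtracted for stability)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def soft_prediction_loss(student_logits, teacher_logits, temperature=4.0):
    """KL(teacher || student) on softened predictions, scaled by T^2.

    The temperature default of 4.0 is an illustrative assumption, not a
    value taken from either paper.
    """
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = 0.0
    for pt, ps in zip(p_t, p_s):
        if pt > 0.0:  # terms with zero teacher probability contribute nothing
            kl += pt * (math.log(pt) - math.log(ps))
    # Scaling by T^2 keeps gradient magnitudes comparable across temperatures.
    return (temperature ** 2) * kl


def feature_matching_loss(student_feat, teacher_feat):
    """Mean squared error between intermediate features of equal dimension."""
    if len(student_feat) != len(teacher_feat):
        raise ValueError("feature vectors must have the same length")
    return sum((s - t) ** 2 for s, t in zip(student_feat, teacher_feat)) / len(student_feat)


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]
    student = [1.5, 1.2, 0.3]
    print("soft-prediction loss:", soft_prediction_loss(student, teacher))
    print("feature loss:", feature_matching_loss([1.0, 2.0], [1.0, 4.0]))
```

In practice the two terms are usually added to the ordinary cross-entropy loss on ground-truth labels with tunable weights; attention- and relation-based variants follow the same pattern but compare different statistics of the two networks.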

Methods


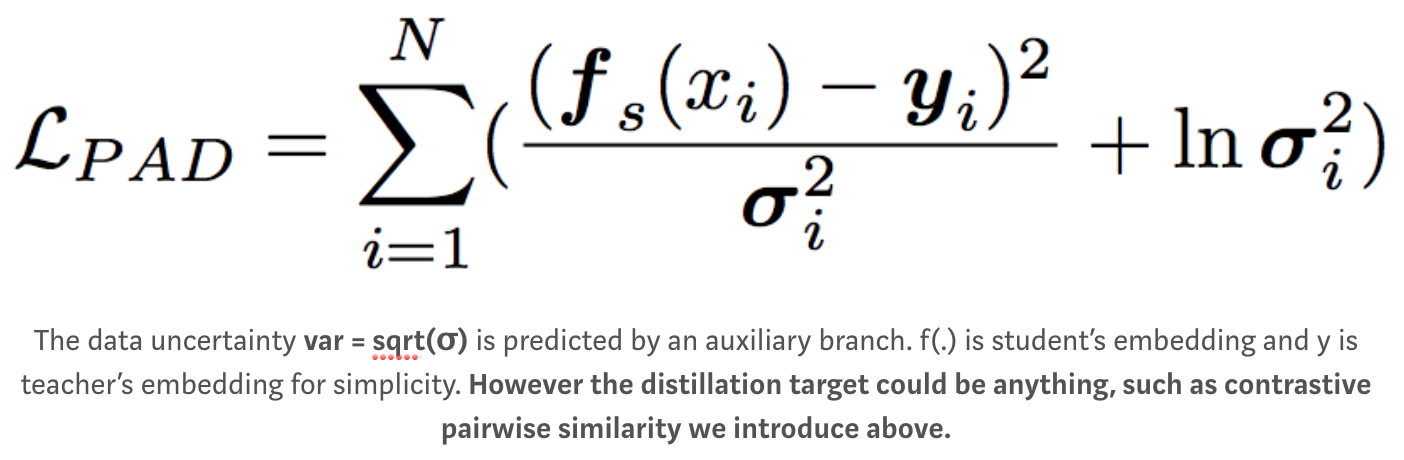

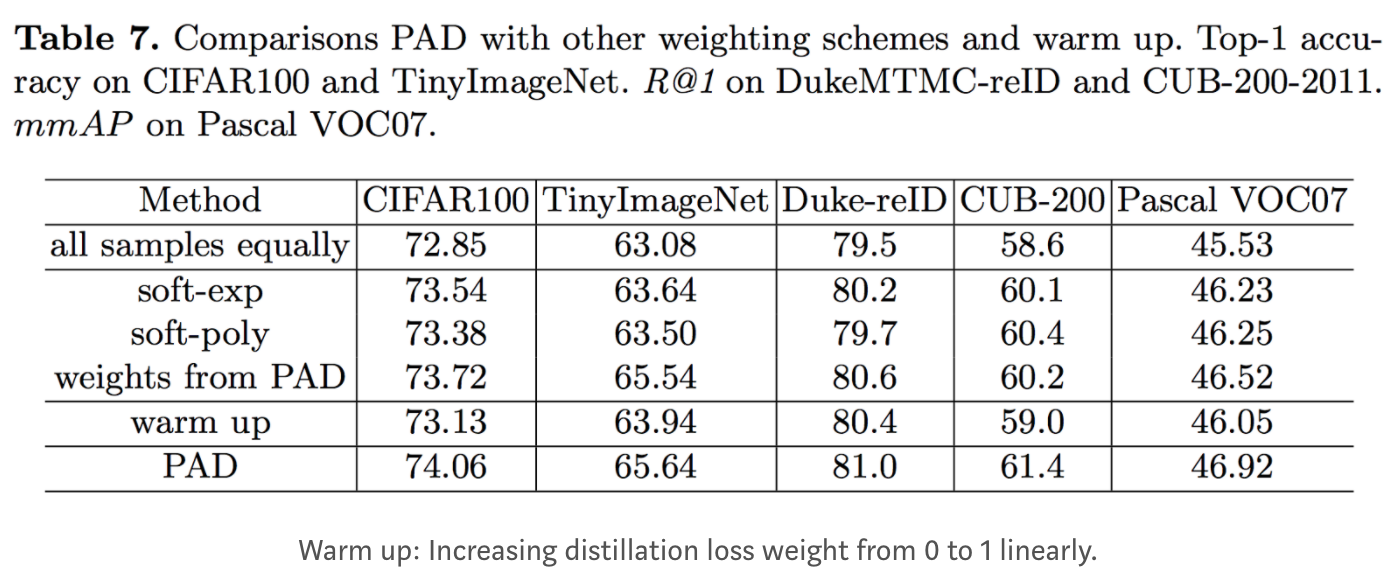

Metadata: Prime-Aware Adaptive Distillation


Further Readings


Metadata: Knowledge Distillation Meets Self-Supervision
