This composition scheduler can be used to schedule compositions with components that can be temporarily stopped, like EC2 instances, RDS instances/clusters and Redshift clusters. It's aimed at:

- Environments that only run during office hours

- Environments that only run on-demand

The goal is to minimize cost, either by scaling resources to zero or to their most minimal configuration.

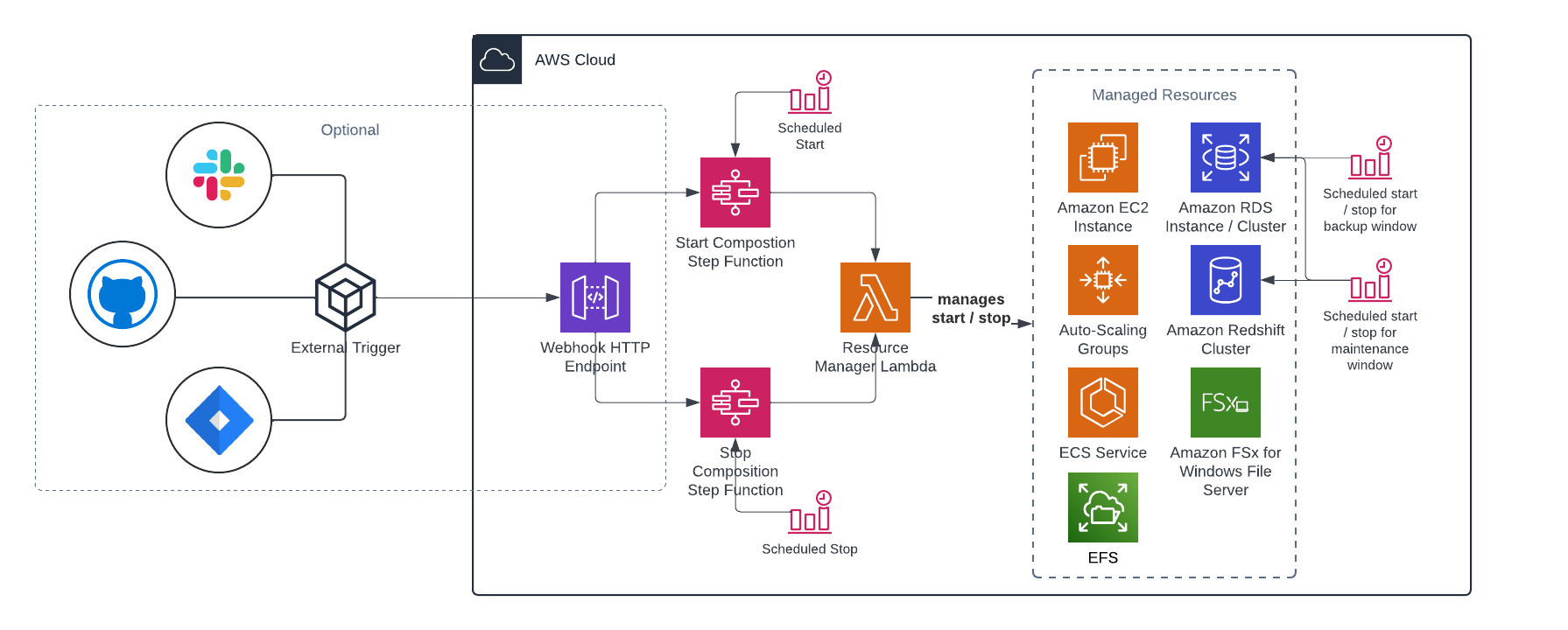

It has the following high level architecture:

The following resource types and actions can be controlled via this scheduler:

- EC2 Auto-Scaling Groups
  - Max size, min size, desired capacity
- EC2 Instances
  - Stop/start the instance
- ECS Services
  - Set desired tasks
- EFS File Systems
  - Set provisioned throughput
- FSx Windows File Systems
  - Set throughput capacity
- RDS Clusters
  - Stop/start the cluster
- RDS Instances
  - Stop/start the instance
- Redshift Clusters
  - Pause/resume the cluster

RDS only supports stopping instances and clusters that are not running in multi-AZ mode.

Schedules are timezone aware, so there is no need to change them when daylight saving time (DST) starts or ends. Keep the behaviour described at https://docs.aws.amazon.com/scheduler/latest/UserGuide/schedule-types.html#daylist-savings-time in mind.
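
To illustrate why a timezone-aware schedule does not need editing around DST, the following minimal sketch converts the same local start time to UTC in winter and in summer. The timezone `Europe/Amsterdam`, the dates, and the helper name `local_start_in_utc` are assumptions chosen for the example; they are not part of this module.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo


def local_start_in_utc(year, month, day, hour, tz_name):
    """Return the UTC moment that corresponds to a local wall-clock hour."""
    local = datetime(year, month, day, hour, tzinfo=ZoneInfo(tz_name))
    return local.astimezone(timezone.utc)


# Hypothetical schedule: start at 08:00 local time in Europe/Amsterdam.
winter = local_start_in_utc(2024, 1, 15, 8, "Europe/Amsterdam")
summer = local_start_in_utc(2024, 7, 15, 8, "Europe/Amsterdam")

# The local time stays 08:00; only the UTC equivalent shifts with DST.
print(winter.hour, summer.hour)
```

A schedule expressed in UTC would have to be edited twice a year to keep the same local start time; a timezone-aware schedule would not.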

The order in which resources are started and stopped is controllable. These operations are asynchronous, and wait times between activities are configurable.

The stop procedure of a schedule is the reverse of the start procedure.

When setting up scheduling, the scheduler checks whether the configured maintenance and backup windows on RDS instances, RDS clusters and Redshift clusters overlap with the scheduled start and stop times of a composition. If they don't, an additional schedule is set up to make sure the instance or cluster is started during the scheduled maintenance and backup windows.
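
The overlap check can be pictured with a simplified sketch. It assumes a composition that runs every day between two fixed UTC times and a maintenance window in the RDS `ddd:hh24:mi-ddd:hh24:mi` format; the function names and the daily-schedule simplification are assumptions for illustration and do not reflect how the module's Lambda is actually implemented.

```python
DAYS = ["mon", "tue", "wed", "thu", "fri", "sat", "sun"]
MINUTES_PER_DAY = 24 * 60


def parse_window(window):
    """Parse 'sun:05:00-sun:05:30' into (start, end) minutes since Monday 00:00 UTC."""
    def to_minutes(part):
        day, hours, minutes = part.split(":")
        return DAYS.index(day.lower()) * MINUTES_PER_DAY + int(hours) * 60 + int(minutes)

    start_text, end_text = window.split("-")
    start, end = to_minutes(start_text), to_minutes(end_text)
    if end <= start:
        # The window wraps around the end of the week.
        end += 7 * MINUTES_PER_DAY
    return start, end


def window_is_covered(window, daily_start, daily_stop):
    """True if the window lies entirely inside one daily running period.

    daily_start and daily_stop are minutes since midnight UTC, with
    daily_start < daily_stop (assumed: the composition runs every day).
    """
    start, end = parse_window(window)
    last_minute = end - 1
    same_day = start // MINUTES_PER_DAY == last_minute // MINUTES_PER_DAY
    return (
        same_day
        and daily_start <= start % MINUTES_PER_DAY
        and last_minute % MINUTES_PER_DAY < daily_stop
    )


# Hypothetical composition running daily from 08:00 to 18:00 UTC.
needs_extra_schedule = not window_is_covered("sun:05:00-sun:05:30", 8 * 60, 18 * 60)
```

In this sketch a window that is not covered is the case where an additional schedule would be needed.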

Optionally, a pair of webhooks can be deployed to trigger starting or stopping an environment based on external events. This allows for on-demand starting or stopping of environments by, for example, a service management ticket, a Slack integration, a custom frontend or a GitHub workflow.

Webhooks require an API key and can be set up to only allow certain IP addresses. To trigger a webhook, make a POST request to one of the endpoints returned as module outputs.
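
A minimal sketch of such a request is shown below. It assumes the API key is passed in the `x-api-key` header, which is the usual convention for API Gateway API keys; the URL and key values are placeholders, and the helper name `build_webhook_request` is hypothetical.

```python
import urllib.request


def build_webhook_request(url, api_key):
    """Build (but do not send) a POST request for a start or stop webhook."""
    return urllib.request.Request(
        url,
        data=b"",  # empty body; assumed sufficient to trigger the webhook
        method="POST",
        headers={"x-api-key": api_key},  # assumption: key is sent as a header
    )


# Placeholder values; use the start_composition_webhook_url and
# webhook_api_key outputs of this module instead.
request = build_webhook_request("https://example.invalid/start", "my-api-key")

# Sending is left commented out so the sketch has no side effects:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```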

Setting up this module requires a composition of the resources that need to be managed. Based on that input, a state machine is generated. Be aware that the composition dictates order: the resources in the composition are controlled in that order when the composition is started. The order is reversed when the composition is stopped.
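
The ordering rule can be sketched as follows. The list of resource type names stands in for a composition and is a simplification; the real input format is defined by the `resource_composition` variable, and the function name `execution_order` is hypothetical.

```python
def execution_order(composition, action):
    """Return the resources in the order they would be handled for an action."""
    if action == "start":
        return list(composition)
    if action == "stop":
        # Stopping walks the composition back to front.
        return list(reversed(composition))
    raise ValueError(f"unknown action: {action!r}")


# Hypothetical composition: database first, then the service that uses it.
composition = ["rds_instance", "ecs_service", "auto_scaling_group"]

start_order = execution_order(composition, "start")
stop_order = execution_order(composition, "stop")
```

Listing a database before the services that depend on it means it is started first and stopped last.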

Please see the examples folder for code examples of how to implement this module.

Resource types require certain parameters in order to function. It's recommended to fill the parameters by referring to existing resources in your Terraform code.

| Resource | Resource Type | Required Parameters |
|---|---|---|
| EC2 Auto-Scaling Group | `auto_scaling_group` | `name`: the name of the auto-scaling group to control<br>`min`: the minimal number of instances to run (used on start of composition)<br>`max`: the maximum number of instances to run (used on start of composition)<br>`desired`: the desired number of instances to run (used on start of composition) |
| EC2 Instance | `ec2_instance` | `id`: the ID of the instance to control |
| ECS Service | `ecs_service` | `cluster_name`: the name of the ECS cluster the service runs on<br>`desired`: the desired number of tasks (used on start of composition)<br>`name`: the name of the ECS service to control |
| EFS Filesystem | `efs_file_system` | `id`: the ID of the filesystem to control<br>`provisioned_throughput_in_mibps`: the provisioned throughput of the filesystem (used on start of composition) |
| FSx Windows Filesystem | `fsx_windows_file_system` | `id`: the ID of the filesystem to control<br>`throughput_capacity`: the throughput capacity of the filesystem (used on start of composition) |
| RDS Cluster | `rds_cluster` | `id`: the ID of the cluster to control |
| RDS Instance | `rds_instance` | `id`: the ID of the instance to control |
| Redshift Cluster | `redshift_cluster` | `id`: the ID of the cluster to control |
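
As an illustration of the table above, the sketch below checks a set of parameters against the required parameters per resource type. The mapping is copied from the table; the function name `missing_params` and the idea of validating in Python are assumptions, since the module itself validates the `resource_composition` variable in Terraform.

```python
# Required parameters per resource type, taken from the table above.
REQUIRED_PARAMS = {
    "auto_scaling_group": {"name", "min", "max", "desired"},
    "ec2_instance": {"id"},
    "ecs_service": {"cluster_name", "desired", "name"},
    "efs_file_system": {"id", "provisioned_throughput_in_mibps"},
    "fsx_windows_file_system": {"id", "throughput_capacity"},
    "rds_cluster": {"id"},
    "rds_instance": {"id"},
    "redshift_cluster": {"id"},
}


def missing_params(resource_type, params):
    """Return the sorted names of required parameters that are not supplied."""
    if resource_type not in REQUIRED_PARAMS:
        raise ValueError(f"unsupported resource type: {resource_type!r}")
    return sorted(REQUIRED_PARAMS[resource_type] - set(params))


# Hypothetical, incomplete ECS service entry.
missing = missing_params("ecs_service", {"name": "web"})
print(missing)
```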

Most of the supported services offer their own methods of scheduling, either with or without timezone support. This module cannot detect existing schedules, so overlapping schedules could contradict each other, resulting in unexpected behaviour.

When an RDS instance is stopped, the stopped time does not count towards the retention time of snapshots. Effectively this means that any snapshot will be retained longer than expected, including any associated cost.

This behaviour is described here: https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/USER_ManagingAutomatedBackups.html

For AWS Backup to be able to create a backup of an RDS instance, it needs to be running. This module is currently not capable of automatically detecting the schedule of any AWS Backup plans. In such cases you will need to manually align the schedules.

FSx Windows File System provisioned throughput is quite expensive. It could be worthwhile to scale down on provisioned throughput during off-hours. Changing provisioned throughput also affects network I/O, memory and disk I/O of the file system.

See https://docs.aws.amazon.com/fsx/latest/WindowsGuide/performance.html for more information.

Throughput can't be changed until 6 hours after the last change was requested. After a throughput change, an optimization phase takes place that could take longer than 6 hours, depending on the size of the file system. Throughput also can't be changed during this optimization phase. Use with care.
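
When planning start and stop times for an FSx file system, the 6-hour rule can be checked with a small sketch like the one below. It only covers the waiting period since the last requested change; the optimization phase has to be checked separately. The function name and timestamps are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Minimum time between two throughput change requests, as stated above.
THROUGHPUT_COOLDOWN = timedelta(hours=6)


def cooldown_elapsed(last_change_requested_at, now):
    """True if at least 6 hours have passed since the last change request."""
    return now - last_change_requested_at >= THROUGHPUT_COOLDOWN


# Hypothetical schedule: scale up at 07:00 UTC, scale down at 12:00 or 19:00 UTC.
scaled_up_at = datetime(2024, 3, 4, 7, 0, tzinfo=timezone.utc)
too_early = cooldown_elapsed(scaled_up_at, datetime(2024, 3, 4, 12, 0, tzinfo=timezone.utc))
late_enough = cooldown_elapsed(scaled_up_at, datetime(2024, 3, 4, 19, 0, tzinfo=timezone.utc))
```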

See https://docs.aws.amazon.com/fsx/latest/WindowsGuide/managing-storage-configuration.html#managing-storage-capacity for more information.

This module uses the integrated Lambda to abstract away some of the more complex functionality. For redistribution purposes, the following dependencies have been vendored:

- pyawscron 1.0.6: https://pypi.org/project/pyawscron/ - https://github.com/pitchblack408/pyawscron/tree/1.0.6

This module is extendable. To add support for more resources, follow these general steps:

- In the Lambda code
  - Add a test
  - Add the resource controller
  - Add the resource to the handler
  - Add the resource to the schema
  - Make sure tests pass
- In the Terraform code
  - Add the resource to the validations of the resource_composition variable
  - Add IAM permissions as a new dynamic block to the Lambda policy document
  - Add an example in the examples folder
  - Update this README (including the architecture image if required)
  - Make sure validations pass

| Name | Version |
|---|---|
| terraform | >= 1.5.0 |
| archive | >= 2.4.0 |
| aws | >= 5.10.0 |

| Name | Version |
|---|---|
| archive | >= 2.4.0 |
| aws | >= 5.10.0 |

| Name | Source | Version |
|---|---|---|
| api_gateway_role | github.com/schubergphilis/terraform-aws-mcaf-role | v0.3.3 |
| eventbridge_scheduler_role | github.com/schubergphilis/terraform-aws-mcaf-role | v0.3.3 |
| lambda_role | github.com/schubergphilis/terraform-aws-mcaf-role | v0.3.3 |
| scheduler_lambda | schubergphilis/mcaf-lambda/aws | ~> 1.1.2 |
| step_functions_role | github.com/schubergphilis/terraform-aws-mcaf-role | v0.3.3 |

| Name | Description | Type | Default | Required |
|---|---|---|---|---|
| composition_name | The name of the controlled composition | string | n/a | yes |
| kms_key_arn | The ARN of the KMS key to use with the Lambda function | string | n/a | yes |
| resource_composition | Resource composition | list(object({ | n/a | yes |
| start_resources_at | Resources start cron expression in selected timezone | string | n/a | yes |
| stop_resources_at | Resources stop cron expression in selected timezone | string | n/a | yes |
| tags | Mapping of tags | map(string) | {} | no |
| timezone | Timezone to execute schedules in | string | "UTC" | no |
| webhooks | Deploy webhooks for external triggers from whitelisted IP CIDRs. | object({ | { | no |

| Name | Description |
|---|---|
| api_gateway_stage_arn | n/a |
| start_composition_state_machine_arn | n/a |
| start_composition_webhook_url | n/a |
| stop_composition_state_machine_arn | n/a |
| stop_composition_webhook_url | n/a |
| webhook_api_key | n/a |