diff --git a/README.md b/README.md

index c876737..5301832 100644

--- a/README.md

+++ b/README.md

@@ -1,10 +1,12 @@

-# Resource Scheduler

+# :clock1: Resource Scheduler

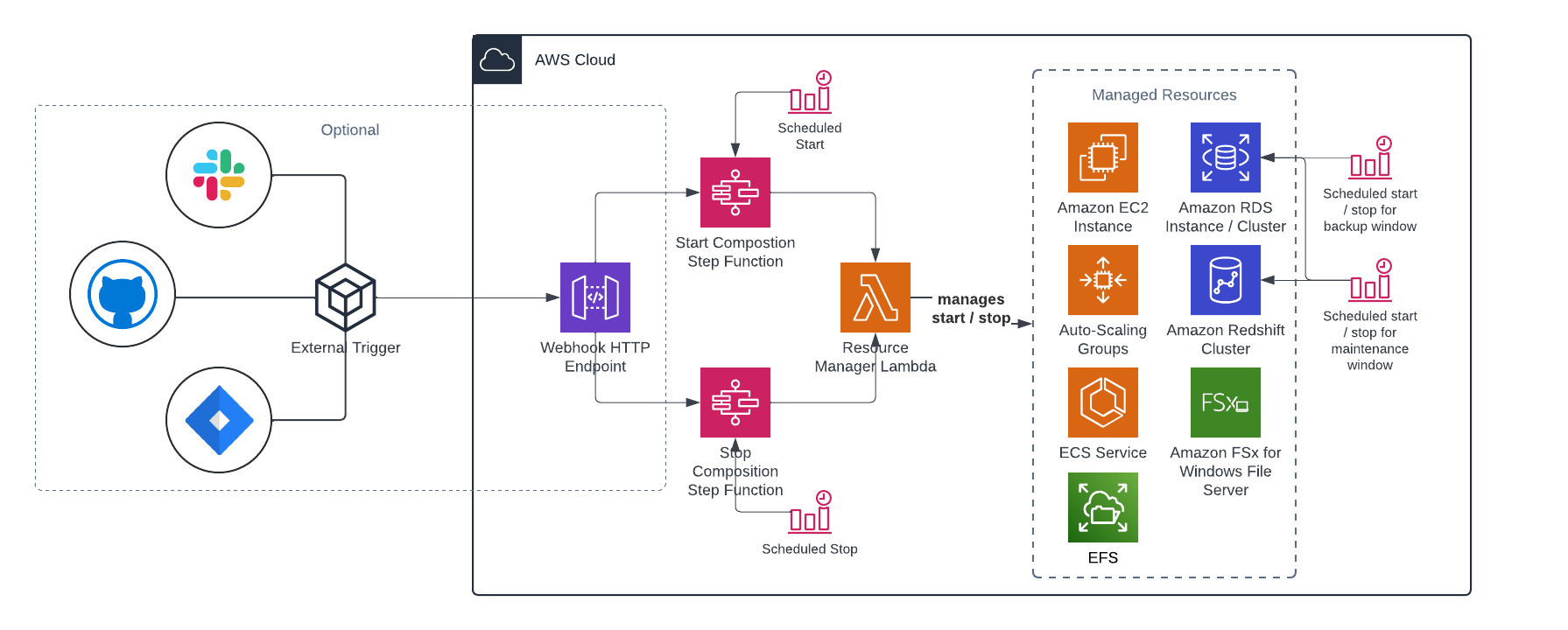

This composition scheduler can be used to schedule compositions with components that can be temporarily stopped, like EC2 instances, RDS instances/clusters and Redshift clusters. It's aimed at:

* Environments that only run during office hours

* Environments that only run on-demand

+The goal is to minimize cost, either by scaling resources to zero or by scaling them down to their minimal configuration.

+

It has the following high level architecture:

@@ -21,6 +23,8 @@ The following resoure types and actions can be controlled via this scheduler:

* Stop/start the instance

* ECS Services

* Set desired tasks

+* EFS File Systems

+ * Set provisioned throughput

* FSx Windows File Systems

* Set throughput capacity

* RDS Clusters

@@ -67,6 +71,7 @@ Resource types require certain parameters in order to function. It's recommended

| EC2 Auto-Scaling Group | auto_scaling_group | **name:** the name of the auto-scaling group to control

**min:** the minimal number of instances to run (used on start of composition)

**max:** the maximum number of instances to run (used on start of composition)

**desired:** the desired number of instances to run (used on start of composition) |

| EC2 Instance | ec2_instance | **id:** the ID of the instance to control |

| ECS Service | ecs_service | **cluster_name:** the name of the ECS cluster the task runs on

**desired:** the desired number of tasks (used on start of composition)

**name:** the name of the ECS task to control |

+| EFS Filesystem | efs_file_system | **id:** the ID of the filesystem to control

**provisioned_throughput_in_mibps:** the provisioned throughput of the filesystem (used on start of composition) |

| FSX Windows Filesystem | fsx_windows_file_system | **id:** the ID of the filesystem to control

**throughput_capacity:** the throughput capacity of the filesystem (used on start of composition) |

| RDS Cluster | rds_cluster | **id:** the ID of the cluster to control |

| RDS Instance | rds_instance | **id:** the ID of the instance to control |

@@ -116,10 +121,10 @@ This module is extendable. To add support for more resources, follow these gener

1. Make sure tests pass

1. In the Terraform code

- 1. Add the resource to the resource_composition variable

- 1. Add validation to the resource_composition variable

- 1. Add an example

- 1. Update this README

+ 1. Add the resource to the validations of the resource_composition variable

+ 1. Add IAM permissions as a new dynamic block to the Lambda policy document

+ 1. Add an example in the examples folder

+ 1. Update this README (including the architecture image if required)

1. Make sure validations pass

diff --git a/docs/architecture.png b/docs/architecture.png

index 1bb7677..24934b1 100644

Binary files a/docs/architecture.png and b/docs/architecture.png differ

diff --git a/examples/webhooks/main.tf b/examples/webhooks/main.tf

index f56bc6d..05565af 100644

--- a/examples/webhooks/main.tf

+++ b/examples/webhooks/main.tf

@@ -12,6 +12,13 @@ module "scheduler" {

"id" : "application-cluster-1"

}

},

+ {

+ "type" : "efs_file_system",

+ "params" : {

+ "id" : "efs-file-system-1",

+ "provisioned_throughput_in_mibps" : 128

+ }

+ },

{

"type" : "wait",

"params" : {

diff --git a/iam.tf b/iam.tf

index 0ad29bc..b389742 100644

--- a/iam.tf

+++ b/iam.tf

@@ -191,6 +191,23 @@ data "aws_iam_policy_document" "lambda_policy" {

]

}

}

+

+ dynamic "statement" {

+ for_each = contains(local.resource_types_in_composition, "efs_file_system") ? toset(["efs_file_system"]) : toset([])

+

+ content {

+ effect = "Allow"

+ actions = [

+ "elasticfilesystem:DescribeFileSystems",

+ "elasticfilesystem:UpdateFileSystem"

+ ]

+ resources = [

+ for resource in var.resource_composition :

+ "arn:aws:elasticfilesystem:${data.aws_region.current.name}:${data.aws_caller_identity.current.id}:file-system/${resource.params["id"]}"

+ if resource.type == "efs_file_system"

+ ]

+ }

+ }

}

module "lambda_role" {
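Review note: the `resources` expression in the new dynamic statement builds one ARN per `efs_file_system` entry in the composition. The following is a minimal Python sketch of that same filtering and string construction, only to make the resulting list easy to inspect. The function name and the sample region and account values are hypothetical and not part of this change.

```python
from typing import Dict, List


def efs_file_system_arns(
    resource_composition: List[Dict], region: str, account_id: str
) -> List[str]:
    """Mirror the Terraform `for ... if` expression: one ARN per EFS entry."""
    return [
        f"arn:aws:elasticfilesystem:{region}:{account_id}:file-system/{resource['params']['id']}"
        for resource in resource_composition
        if resource["type"] == "efs_file_system"
    ]


# Hypothetical composition with one EFS entry and one unrelated entry.
composition = [
    {"type": "efs_file_system", "params": {"id": "fs-1234567890"}},
    {"type": "ec2_instance", "params": {"id": "i-0123456789abcdef0"}},
]
arns = efs_file_system_arns(composition, "eu-west-1", "123456789012")
```

Only the EFS entry contributes an ARN, so the policy statement stays scoped to the file systems named in the composition.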

diff --git a/scheduler/scheduler/resource_controllers/efs_file_system_controller.py b/scheduler/scheduler/resource_controllers/efs_file_system_controller.py

new file mode 100644

index 0000000..95f0285

--- /dev/null

+++ b/scheduler/scheduler/resource_controllers/efs_file_system_controller.py

@@ -0,0 +1,59 @@

+import boto3

+from typing import Tuple

+

+from scheduler.resource_controller import ResourceController

+

+EFS_MINIMAL_THROUGHPUT_CAPACITY = 1.0

+

+efs = boto3.client("efs")

+

+

+class EfsFileSystemController(ResourceController):

+ def __init__(

+ self,

+ id: str,

+ provisioned_throughput_in_mibps: str,

+ ):

+ super().__init__()

+ self.id = id

+ self.provisioned_throughput_in_mibps = float(

+ int(provisioned_throughput_in_mibps)

+ )

+

+ def start(self) -> Tuple[bool, str]:

+ file_system = efs.describe_file_systems(FileSystemId=self.id)["FileSystems"][0]

+ if file_system["ThroughputMode"] != "provisioned":

+ return (

+ False,

+ f"EFS Filesystem {self.id} is not in provisioned throughput mode",

+ )

+

+ efs.update_file_system(

+ FileSystemId=self.id,

+ ThroughputMode="provisioned",

+ ProvisionedThroughputInMibps=self.provisioned_throughput_in_mibps,

+ )

+

+ return (

+ True,

+ f"Throughput capacity for {self.id} adjusted to {self.provisioned_throughput_in_mibps} MiB/s",

+ )

+

+ def stop(self) -> Tuple[bool, str]:

+ file_system = efs.describe_file_systems(FileSystemId=self.id)["FileSystems"][0]

+ if file_system["ThroughputMode"] != "provisioned":

+ return (

+ False,

+ f"EFS Filesystem {self.id} is not in provisioned throughput mode",

+ )

+

+ efs.update_file_system(

+ FileSystemId=self.id,

+ ThroughputMode="provisioned",

+ ProvisionedThroughputInMibps=EFS_MINIMAL_THROUGHPUT_CAPACITY,

+ )

+

+ return (

+ True,

+ f"Throughput capacity for {self.id} adjusted to {EFS_MINIMAL_THROUGHPUT_CAPACITY} MiB/s",

+ )
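Review note: the describe-then-update flow in `start` and `stop` can be exercised without AWS credentials by injecting a client. The sketch below is an assumption-laden illustration, not the module's code: `FakeEfsClient` and `set_throughput` are hypothetical names, and the fake only reproduces the `FileSystems` list and `ThroughputMode` field of the `describe_file_systems` response.

```python
from typing import Dict, List, Tuple


class FakeEfsClient:
    """Hypothetical stand-in for boto3.client("efs") that records update calls."""

    def __init__(self, throughput_mode: str):
        self.throughput_mode = throughput_mode
        self.updates: List[Dict] = []

    def describe_file_systems(self, FileSystemId: str) -> Dict:
        # Same outer shape as the real response: a list under "FileSystems".
        return {
            "FileSystems": [
                {"FileSystemId": FileSystemId, "ThroughputMode": self.throughput_mode}
            ]
        }

    def update_file_system(self, **kwargs) -> Dict:
        self.updates.append(kwargs)
        return kwargs


def set_throughput(client, file_system_id: str, mibps: float) -> Tuple[bool, str]:
    # The description is the first element of "FileSystems"; index into it once.
    file_system = client.describe_file_systems(FileSystemId=file_system_id)[
        "FileSystems"
    ][0]
    if file_system["ThroughputMode"] != "provisioned":
        return (
            False,
            f"EFS Filesystem {file_system_id} is not in provisioned throughput mode",
        )
    client.update_file_system(
        FileSystemId=file_system_id,
        ThroughputMode="provisioned",
        ProvisionedThroughputInMibps=mibps,
    )
    return True, f"Throughput capacity for {file_system_id} adjusted to {mibps} MiB/s"


provisioned = FakeEfsClient("provisioned")
ok_result = set_throughput(provisioned, "fs-1234567890", 1.0)

bursting = FakeEfsClient("bursting")
skipped_result = set_throughput(bursting, "fs-1234567890", 128.0)
```

With a file system in bursting mode the function returns a failure tuple and never calls `update_file_system`, which is the guard both controller methods rely on.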

diff --git a/scheduler/scheduler/resource_controllers/fsx_windows_file_system_controller.py b/scheduler/scheduler/resource_controllers/fsx_windows_file_system_controller.py

index 84bbc97..7d959cf 100644

--- a/scheduler/scheduler/resource_controllers/fsx_windows_file_system_controller.py

+++ b/scheduler/scheduler/resource_controllers/fsx_windows_file_system_controller.py

@@ -3,7 +3,7 @@

from scheduler.resource_controller import ResourceController

-MINIMAL_THROUGHPUT_CAPACITY = 32

+FSX_MINIMAL_THROUGHPUT_CAPACITY = 32

fsx = boto3.client("fsx")

@@ -25,15 +25,17 @@ def start(self) -> Tuple[bool, str]:

)

return (

True,

- f"Throughput capacity for {self.id} started adjusted to {self.throughput_capacity} MB/s",

+ f"Throughput capacity for {self.id} started adjustment to {self.throughput_capacity} MB/s",

)

def stop(self) -> Tuple[bool, str]:

fsx.update_file_system(

FileSystemId=self.id,

- WindowsConfiguration={"ThroughputCapacity": MINIMAL_THROUGHPUT_CAPACITY},

+ WindowsConfiguration={

+ "ThroughputCapacity": FSX_MINIMAL_THROUGHPUT_CAPACITY

+ },

)

return (

True,

- f"Throughput capacity for {self.id} started adjusted to {MINIMAL_THROUGHPUT_CAPACITY} MB/s",

+ f"Throughput capacity for {self.id} started adjustment to {FSX_MINIMAL_THROUGHPUT_CAPACITY} MB/s",

)

diff --git a/scheduler/scheduler/scheduler.py b/scheduler/scheduler/scheduler.py

index c0bd832..723405a 100644

--- a/scheduler/scheduler/scheduler.py

+++ b/scheduler/scheduler/scheduler.py

@@ -4,11 +4,14 @@

import scheduler.schemas as schemas

from scheduler.cron_helper import extend_windows

-from scheduler.resource_controllers.ec2_instance_controller import Ec2InstanceController

-from scheduler.resource_controllers.ecs_service_controller import EcsServiceController

from scheduler.resource_controllers.auto_scaling_group_controller import (

AutoscalingGroupController,

)

+from scheduler.resource_controllers.ec2_instance_controller import Ec2InstanceController

+from scheduler.resource_controllers.ecs_service_controller import EcsServiceController

+from scheduler.resource_controllers.efs_file_system_controller import (

+ EfsFileSystemController,

+)

from scheduler.resource_controllers.fsx_windows_file_system_controller import (

FsxWindowsFileSystemController,

)

@@ -65,6 +68,10 @@ def handler(event, _context) -> Dict:

success, msg = FsxWindowsFileSystemController(**params).start()

case ("fsx_windows_file_system", "stop"):

success, msg = FsxWindowsFileSystemController(**params).stop()

+ case ("efs_file_system", "start"):

+ success, msg = EfsFileSystemController(**params).start()

+ case ("efs_file_system", "stop"):

+ success, msg = EfsFileSystemController(**params).stop()

if success:

logger.info(msg)

diff --git a/scheduler/scheduler/schemas.py b/scheduler/scheduler/schemas.py

index 05be203..018c134 100644

--- a/scheduler/scheduler/schemas.py

+++ b/scheduler/scheduler/schemas.py

@@ -15,6 +15,7 @@

"cron_helper",

"ec2_instance",

"ecs_service",

+ "efs_file_system",

"fsx_windows_file_system",

"rds_cluster",

"rds_instance",

@@ -86,6 +87,19 @@

"throughput_capacity",

],

},

+ "efs_file_system_params": {

+ "type": "object",

+ "properties": {

+ "id": {

+ "type": "string",

+ },

+ "provisioned_throughput_in_mibps": {"type": "string"},

+ },

+ "required": [

+ "id",

+ "provisioned_throughput_in_mibps",

+ ],

+ },

},

"allOf": [

{"required": ["action", "resource_type"]},
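Review note: the schema declares `provisioned_throughput_in_mibps` as a string, and the controller converts it with `float(int(...))`. The short sketch below isolates that conversion to show which inputs it accepts. The helper name is hypothetical.

```python
def parse_provisioned_throughput(value: str) -> float:
    """Apply the same string -> int -> float conversion as the controller."""
    return float(int(value))


whole_number = parse_provisioned_throughput("128")

# A fractional string passes the schema (it is a string) but fails the
# int() step, so the controller would raise before calling the EFS API.
try:
    parse_provisioned_throughput("1.5")
    fractional_rejected = False
except ValueError:
    fractional_rejected = True
```

If fractional throughput values ever need to be supported, the conversion would have to change; as written, only whole-number strings are accepted.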

diff --git a/scheduler/tests/test_scheduler.py b/scheduler/tests/test_scheduler.py

index b4d5db7..673448c 100644

--- a/scheduler/tests/test_scheduler.py

+++ b/scheduler/tests/test_scheduler.py

@@ -203,5 +203,28 @@ def test_scheduler_fsx_windows_file_system_stop(lambda_context):

}

}

+def test_scheduler_efs_file_system_start(lambda_context):

+ payload = {

+ "resource_type": "efs_file_system",

+ "action": "start",

+ "efs_file_system_params": {

+ "id": "fs-1234567890",

+ "provisioned_throughput_in_mibps": "128",

+ }

+ }

+

+ with pytest.raises(NoCredentialsError):

+ handler(payload, lambda_context)

+

+def test_scheduler_efs_file_system_stop(lambda_context):

+ payload = {

+ "resource_type": "efs_file_system",

+ "action": "stop",

+ "efs_file_system_params": {

+ "id": "fs-1234567890",

+ "provisioned_throughput_in_mibps": "128",

+ }

+ }

+

with pytest.raises(NoCredentialsError):

handler(payload, lambda_context)

diff --git a/variables.tf b/variables.tf

index 6be1836..0fd5773 100644

--- a/variables.tf

+++ b/variables.tf

@@ -11,7 +11,7 @@ variable "resource_composition" {

description = "Resource composition"

validation {

- condition = length([for r in var.resource_composition : r if contains(["ec2_instance", "rds_instance", "rds_cluster", "auto_scaling_group", "ecs_service", "redshift_cluster", "wait", "fsx_windows_file_system"], r.type)]) == length(var.resource_composition)

+ condition = length([for r in var.resource_composition : r if contains(["ec2_instance", "rds_instance", "rds_cluster", "auto_scaling_group", "ecs_service", "redshift_cluster", "wait", "fsx_windows_file_system", "efs_file_system"], r.type)]) == length(var.resource_composition)

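- error_message = "Resource type must be one of ec2_instance, rds_instance, rds_cluster, auto_scaling_group, ecs_service, redshift_cluster or fsx_windows_file_system"

+ error_message = "Resource type must be one of ec2_instance, rds_instance, rds_cluster, auto_scaling_group, ecs_service, redshift_cluster, wait, fsx_windows_file_system or efs_file_system"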

}

@@ -30,6 +30,11 @@ variable "resource_composition" {

error_message = "ECS Service resources must have 'cluster_name', 'desired' and 'name' parameters"

}

+ validation {

+ condition = !contains([for r in var.resource_composition : (r.type == "efs_file_system" ? keys(r.params) == tolist(["id", "provisioned_throughput_in_mibps"]) : true)], false)

+ error_message = "EFS Filesystem resources must have 'id' and 'provisioned_throughput_in_mibps' parameters"

+ }

+

validation {

condition = !contains([for r in var.resource_composition : (r.type == "fsx_windows_file_system" ? keys(r.params) == tolist(["id", "throughput_capacity"]) : true)], false)

error_message = "FSx Windows Filesystem resources must have 'id' and 'throughput_capacity' parameters"
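Review note: the new validation compares `keys(r.params)` against a fixed list. Terraform's `keys` function returns map keys in lexicographical order, so the comparison only works because the expected list is itself sorted. The Python sketch below mirrors that check under the same assumption; the function and constant names are hypothetical.

```python
from typing import Dict, List

# Must be in sorted order, like the list passed to tolist() in the validation.
EXPECTED_EFS_KEYS = ["id", "provisioned_throughput_in_mibps"]


def efs_params_valid(params: Dict[str, object]) -> bool:
    # sorted() plays the role of Terraform's keys(), which returns sorted keys.
    return sorted(params.keys()) == EXPECTED_EFS_KEYS


def composition_valid(resource_composition: List[Dict]) -> bool:
    # Non-EFS entries are ignored, matching the `: true` branch of the conditional.
    return all(
        efs_params_valid(resource["params"])
        for resource in resource_composition
        if resource["type"] == "efs_file_system"
    )


good = composition_valid(
    [
        {
            "type": "efs_file_system",
            "params": {"provisioned_throughput_in_mibps": 128, "id": "fs-1"},
        }
    ]
)
missing_key = composition_valid(
    [{"type": "efs_file_system", "params": {"id": "fs-1"}}]
)
extra_key = composition_valid(
    [
        {
            "type": "efs_file_system",
            "params": {"id": "fs-1", "provisioned_throughput_in_mibps": 128, "x": 1},
        }
    ]
)
```

The check is an exact match on the key set, so both a missing and an unexpected parameter are rejected.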