https://team-1616393125888.atlassian.net/jira/software/projects/DEV/boards/1

- Scrum Roles

- Scope

- Workflows

- Requirements

- Project Planning

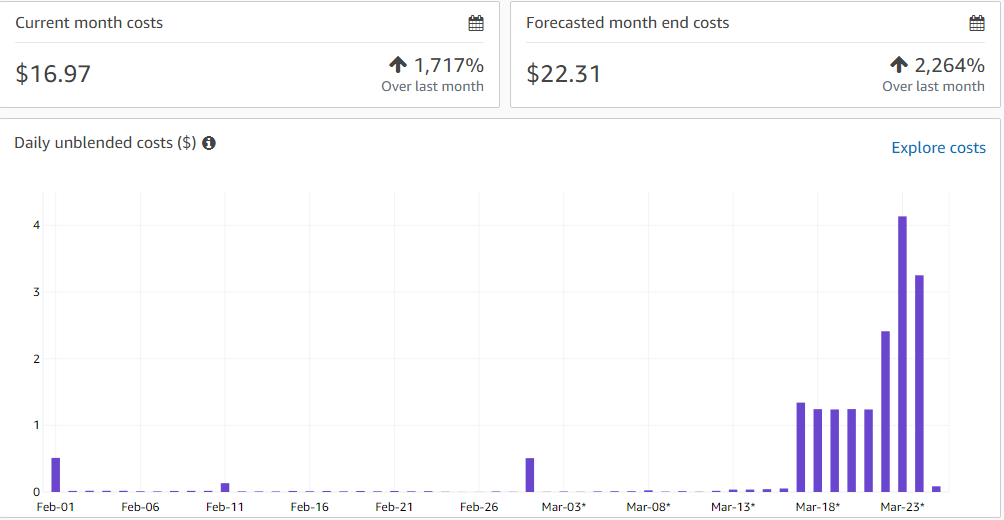

- Budget

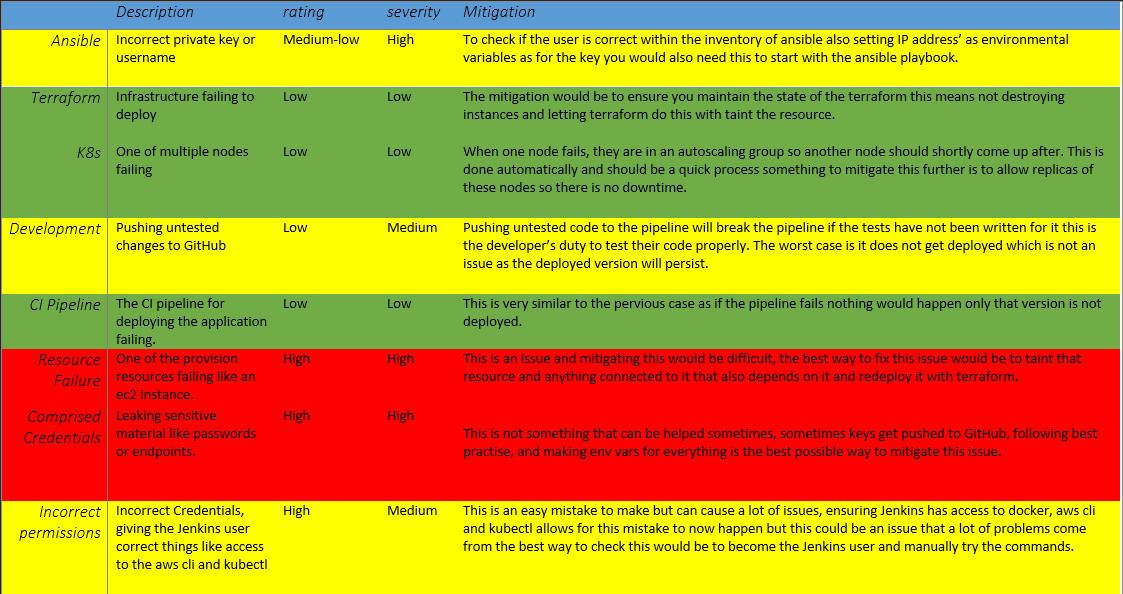

- Risk Assessment

- Terraform

- Ansible

- Kubernetes

- Jenkins

- Improvements

This is a group challenge to see what we can produce and how well we can apply the knowledge we have gained over the last 12 weeks. It is also a chance to gain experience of working as a team to complete a deliverable, which will be invaluable in future roles.

In short, we were expected to deploy an application of our choosing. We went with something one of us had developed before the course: an unfinished but working API and frontend. The frontend uses React and the backend uses Python.

This project should demonstrate a deployment process that's not dependent on the application being deployed.

For this project we all worked in an agile way, taking on roles and holding daily stand-ups. Understanding the issues raised in the stand-ups was a major factor in our success. We also held a retrospective after the sprint, our only one, which lasted 4 days, with the project demo on the 5th day.

Our objective was to plan, design and implement a solution for automating the development workflows and deployments of this application.

We used an agile board on Jira to manage the product backlog and keep the whole team aware of progress.

Jack Pendlebury - Project Manager

Andrea Torres - Development Team

Dale Walker - Development Team

Bilal Shafiq - Product Owner

Lee Ashworth - Scrum Master

For support and help during this project:

- The QA team

The budget for this project was £20 for the week. We met it easily by managing all of our resources with Terraform, which meant we could tear everything down at the end of each day and quickly bring it all back up at the start of the next.
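The daily teardown and rebuild can be wrapped in a small helper. This is a minimal sketch, assuming the Terraform configuration lives in the current directory; the `infra` function name is hypothetical and not part of our repository. `-auto-approve` skips Terraform's interactive confirmation, which is convenient for a routine job but worth using carefully.

```shell
# Hypothetical helper for the daily routine: tear everything down in the
# evening and bring it back up in the morning to stay within budget.
# Source this file, then run `infra up` or `infra down` in the Terraform directory.
infra() {
  case "${1:-}" in
    up)
      # Create (or update) every resource described in the configuration.
      terraform apply -auto-approve
      ;;
    down)
      # Destroy every resource tracked in the Terraform state.
      terraform destroy -auto-approve
      ;;
    *)
      echo "usage: infra up|down" >&2
      return 2
      ;;
  esac
}
```

Running `infra down` at the end of the day and `infra up` the next morning means resources only cost money while someone is working on them.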

Terraform is a tool for building and changing infrastructure efficiently. It is probably the most widely used infrastructure-as-code tool, allowing you to describe your infrastructure in a high-level configuration language. The configurations can also be shared and reused.

For this project, learning and using Terraform has been great. It is an excellent tool that makes creating and building infrastructure much easier, which is one of the main reasons we decided to use it.

Running `terraform apply` builds all of the infrastructure on AWS. This takes about 15 minutes on average, but the great thing about Terraform is that it saves the current state, so when you change something it applies only that change without destroying the unaffected resources. Terraform was the first thing we worked on in this project; having the infrastructure defined from the start allowed us to easily bring it up and take it down when it wasn't being used.

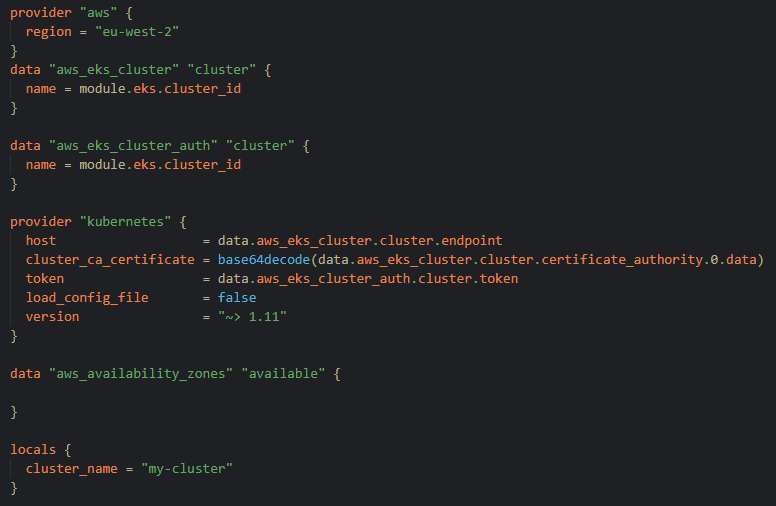

We wanted all of our infrastructure to be deployed with Terraform: a Kubernetes (k8s) cluster on EKS and two EC2 instances, one for Jenkins and one for our bastion server.

This can be done from any machine, provided it is authenticated as the correct IAM user through environment variables. We can also set up the bastion server to connect to the cluster.
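A quick pre-flight check helps confirm that credentials are present before running Terraform. This sketch assumes the credentials are provided through the standard AWS environment variables (`AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY`, `AWS_DEFAULT_REGION`), which both the AWS CLI and the Terraform AWS provider read; the `require_aws_env` name is hypothetical.

```shell
# Hypothetical pre-flight check, run before `terraform apply` or any `aws` call.
# Assumes credentials are supplied through the standard AWS environment variables.
require_aws_env() {
  local var missing=0
  for var in AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_DEFAULT_REGION; do
    # ${!var} is bash indirect expansion: the value of the variable named in $var.
    if [ -z "${!var:-}" ]; then
      echo "missing environment variable: $var" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

`require_aws_env && aws sts get-caller-identity` then prints the account and ARN of the IAM identity that Terraform will act as.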

For the Jenkins machine, we saved the current state of the instance, with everything installed and the AWS CLI already logged in, as an AMI. This meant we could replicate it each time in a controlled manner.

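```shell
# Store the IAM user's access keys and default region for the AWS CLI
$ aws configure
# Download the providers and modules the configuration needs
$ terraform init
# Create or update all of the infrastructure
$ terraform apply
# Point kubectl at the new EKS cluster
$ aws eks --region eu-west-2 update-kubeconfig --name my-cluster
# Delete the local kubeconfig (e.g. once the cluster has been torn down)
$ rm ~/.kube/config
# Find auth-related resources in the Terraform state
$ terraform state list | grep auth
# Stop Terraform tracking a resource without destroying it
$ terraform state rm <thing>
```

Applying infrastructure changes is fast and simple when using Terraform.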

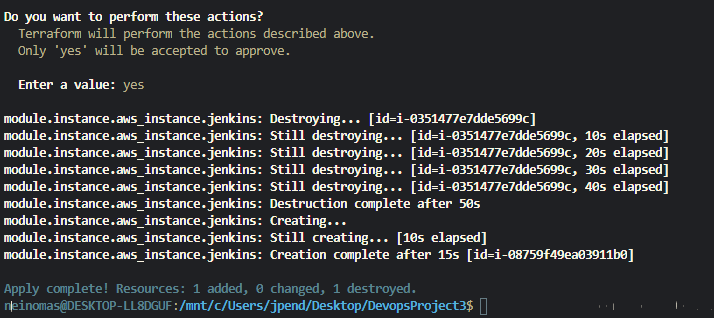

Another reason we used Terraform is that it tracks its own state: when you apply changes, it replaces or tears down only the resources that changed. This means you don't need to wait for the whole infrastructure to be torn down; you can simply apply the new configuration.

When something isn't working you can also use the command:

```shell
$ terraform taint <resource address>
```

This marks the resource that isn't working as tainted, so the next `terraform apply` tears it down and creates it again. Overall this saves a lot of time, as you don't need to destroy everything each time.
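Since Terraform v0.15.2, `terraform taint` is deprecated in favour of `terraform apply -replace=<address>`, which plans the replacement in a single step. Below is a minimal sketch of a helper built on it; the `rebuild` name and the example address `aws_instance.jenkins` are hypothetical.

```shell
# Hypothetical helper: force Terraform to destroy and re-create one broken
# resource while leaving the rest of the infrastructure alone.
rebuild() {
  local address="${1:-}"
  if [ -z "$address" ]; then
    echo "usage: rebuild <resource address>" >&2
    return 2
  fi
  # Refuse to continue if the address is not tracked in the current state.
  if ! terraform state list | grep -Fxq -- "$address"; then
    echo "not in state: $address" >&2
    return 1
  fi
  # Equivalent to `terraform taint <address>` followed by `terraform apply`.
  terraform apply -replace="$address"
}
```

For example, `rebuild aws_instance.jenkins` would destroy and recreate only that instance, and because `-auto-approve` is not used, the plan is still shown for confirmation first.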

The notable things we used Terraform for were our Kubernetes cluster, our Jenkins CI server and the bastion server.

For the Jenkins server we also used custom images, which let us retain the Jenkins installation as well as our pipeline and user accounts. We did this so that if the EC2 instance failed and we needed to redeploy it, the fix would be quick and simple. We also hope to automate this process by taking snapshots of the instance and feeding them back into Terraform as image versions.
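The snapshot automation could look roughly like this: bake the running Jenkins instance into a versioned AMI with the AWS CLI, wait until it is available, then pass its ID to Terraform. This is a sketch under assumptions. The `snapshot_jenkins` function and the `jenkins_ami_id` Terraform variable are hypothetical, and the configuration would need to use that variable as the Jenkins instance's `ami`.

```shell
# Hypothetical sketch of the snapshot automation we hoped to add: bake the
# current Jenkins instance into a versioned AMI, then feed it to Terraform.
# Assumes the Terraform configuration declares a variable named jenkins_ami_id.
snapshot_jenkins() {
  local instance_id="${1:-}" version="${2:-}" ami_id
  if [ -z "$instance_id" ] || [ -z "$version" ]; then
    echo "usage: snapshot_jenkins <instance-id> <version>" >&2
    return 2
  fi
  # --no-reboot keeps Jenkins running while the image is taken, at the cost
  # of file-system consistency guarantees for the snapshot.
  ami_id=$(aws ec2 create-image \
    --instance-id "$instance_id" \
    --name "jenkins-$version" \
    --no-reboot \
    --query ImageId --output text) || return 1
  # Block until the new image can be used to launch instances.
  aws ec2 wait image-available --image-ids "$ami_id" || return 1
  terraform apply -var "jenkins_ami_id=$ami_id"
}
```

Calling `snapshot_jenkins i-0123456789abcdef0 v3` would produce an image named `jenkins-v3`. Keeping the version in the name makes it easy to roll back to an earlier image.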

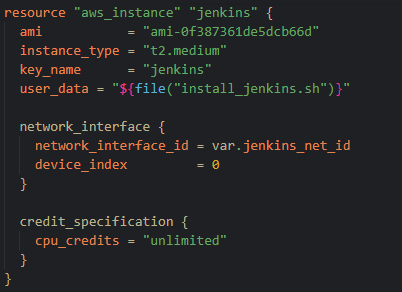

The final thing we did with Terraform could have been done with Ansible, but we decided it was best done in Terraform: a script to install Jenkins and Docker and give the jenkins user access to Docker. This script is no longer very useful, because it allowed us to save a snapshot and turn it into the image we are now using.
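Here is a sketch of what such a setup script might contain. It assumes an Ubuntu AMI (run as root via EC2 user_data) and that the Jenkins apt repository has already been added as described in the official Jenkins installation guide. Package names are Ubuntu's; `run`, `provision_ci` and the `DRY_RUN` switch are hypothetical helpers so the commands can be previewed without installing anything.

```shell
# Hypothetical EC2 user_data sketch, assuming an Ubuntu AMI and that the
# Jenkins apt repository has already been configured. Runs as root.
# Set DRY_RUN=1 to print each command instead of executing it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

provision_ci() {
  run apt-get update || return 1
  # Jenkins needs a Java runtime; docker.io is Ubuntu's packaged Docker engine.
  run apt-get install -y openjdk-17-jre docker.io || return 1
  run apt-get install -y jenkins || return 1
  # Give the jenkins user access to the Docker daemon so pipelines can build images.
  run usermod -aG docker jenkins || return 1
  # Group changes only apply to new processes, so restart the Jenkins service.
  run systemctl restart jenkins
}
```

Used as user_data, the script would end with a call to `provision_ci`. Running `DRY_RUN=1 provision_ci` prints the commands instead of executing them.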

Ansible is an open-source, agentless configuration management and application deployment tool that enables infrastructure as code.

In this project we primarily used Ansible to configure our virtual machines by installing crucial packages. We achieved this by proxying SSH through our bastion server, so Ansible could securely access all the virtual machines in the infrastructure network and configure them accordingly.

We achieve the SSH proxy through the bastion server from our local machine by setting an Ansible variable that specifies the location of the bastion's private SSH key on our local machine. We then use the following Ansible command string:

```
ProxyCommand="ssh -W %h:%p -q user@<bastion ip address>"
```

This proxies SSH through the specified bastion IP address as the specified user, provided the path to the bastion server's private key is set correctly as a variable.
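To avoid hand-editing the ProxyCommand, it can be generated and passed to Ansible through its `ansible_ssh_common_args` inventory variable or the `--ssh-common-args` command-line option. This is a minimal sketch. The `bastion_ssh_args` function, user name, IP address and key path are all hypothetical examples.

```shell
# Hypothetical helper that builds the SSH options Ansible should use so that
# every connection hops through the bastion server first.
bastion_ssh_args() {
  local user="${1:-}" bastion_ip="${2:-}" key_path="${3:-}"
  if [ -z "$user" ] || [ -z "$bastion_ip" ] || [ -z "$key_path" ]; then
    echo "usage: bastion_ssh_args <user> <bastion-ip> <private-key-path>" >&2
    return 2
  fi
  # -W %h:%p forwards the connection to the host and port Ansible is targeting,
  # -q silences warnings, and -i selects the bastion's private key.
  # %% is printf's escape for a literal %, so ssh receives %h and %p.
  printf -- '-o ProxyCommand="ssh -W %%h:%%p -q -i %s %s@%s"\n' \
    "$key_path" "$user" "$bastion_ip"
}
```

A command such as `ansible all -i inventory.ini -m ping --ssh-common-args "$(bastion_ssh_args ubuntu 203.0.113.10 ~/.ssh/bastion.pem)"` should then reach every host through the bastion, with each host replying `pong`.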

We used Ansible Galaxy roles to define our configuration settings. The roles used were:

• Ping - The ping role is configured to ping the Ansible host, check for the "pong" response and display a hello from the host's external IP address via an Ansible variable. This confirms the successful exchange of packets and access to the virtual machine's bash shell.

• Kubectl - The kubectl role is configured to install the Kubernetes kubectl package on each cluster node, as well as on the CI/CD server, to allow infrastructure-wide Kubernetes cluster access and management.

It will then display the installed version of kubectl as an Ansible variable.

• Aws-cli - The Aws-cli role is configured to install the Amazon Web Services command line interface onto the CI/CD server to allow programmatic access, via an IAM user, to the virtual private cloud (VPC).

It will then display the installed version of Aws-cli as an ansible variable.

• Jenkins - The jenkins role is configured to install the Java OpenJDK dependency package, then install and start Jenkins and display its initial admin password as an Ansible variable.

Kubernetes is an open-source container orchestration platform that automates many of the manual processes involved in deploying, managing, and scaling containerized applications.

We are going to deploy two applications using Kubernetes.

1. Frontend: React app

2. Backend: FastAPI app

How are we going to do it?

- We will build the images of our two applications using Docker & Docker-Compose.

- Dockerfiles

- Docker-compose.yaml

- We will create a deployment/pod of the backend application:

Deployment / Pod - Backend

In order for this deployment to communicate continuously with the rest of the components of the cluster, we will create an internal service called backend-service.

Service - Backend

- We will do the same with our frontend application. Firstly, we will create a deployment/pod:

Deployment / Pod - Frontend

Secondly, we will create an internal service (frontend-service).

Service - Frontend

- The NGINX pod will be the component that joins the frontend application and the backend application.

Deployment / Pod - Nginx

All HTTP requests will go through the NGINX pod, but will then be sent to the correct service based on the requested URL.

We will keep the nginx configuration data separate from the application code, in a ConfigMap file.

ConfigMap - nginx-config

- Finally, we need these two internally deployed services to be exposed to the outside world. To do this, we will create an external service (Load Balancer) that allows our NGINX pod to receive external requests.

Service - Load Balancer

A request coming from the browser will go to the external service (Load Balancer), which will redirect it to the NGINX pod. The NGINX pod will send the request to the correct internal service (Frontend or Backend), and from there the request will be redirected to either the frontend or the backend pod.

In the event that the request goes to the backend application (backend pod), this pod will also communicate with a database that will be hosted on Amazon RDS.
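The layout described above can be approximated imperatively with `kubectl`. This is a sketch, not the project's actual manifests. The image names, the container ports (8000 for the FastAPI backend, 3000 for the React frontend), the `/api/` routing prefix and the `nginx-lb` Service name are all assumptions. Only the NGINX Deployment is written as a manifest, because `kubectl create deployment` has no flags for mounting a ConfigMap as a volume.

```shell
# Hypothetical imperative sketch of the Kubernetes layout described above.
# Image names, ports (8000 backend, 3000 frontend) and the /api/ prefix are
# assumptions, not the project's actual manifests.
BACKEND_IMAGE="${BACKEND_IMAGE:-example/backend:latest}"
FRONTEND_IMAGE="${FRONTEND_IMAGE:-example/frontend:latest}"

# NGINX server block: /api/ goes to the backend Service, everything else to
# the frontend Service. Service names resolve through the cluster's DNS.
render_nginx_conf() {
  cat <<'EOF'
server {
    listen 80;
    location /api/ {
        proxy_pass http://backend-service:8000;
    }
    location / {
        proxy_pass http://frontend-service:3000;
    }
}
EOF
}

deploy_stack() {
  local conf_dir
  # Backend and frontend Deployments, each behind an internal ClusterIP Service.
  kubectl create deployment backend --image="$BACKEND_IMAGE" --port=8000 || return 1
  kubectl expose deployment backend --name=backend-service --port=8000 || return 1
  kubectl create deployment frontend --image="$FRONTEND_IMAGE" --port=3000 || return 1
  kubectl expose deployment frontend --name=frontend-service --port=3000 || return 1

  # Keep the NGINX configuration out of the application code, in a ConfigMap.
  conf_dir=$(mktemp -d) || return 1
  render_nginx_conf > "$conf_dir/default.conf"
  kubectl create configmap nginx-config --from-file="$conf_dir/default.conf" || return 1

  # NGINX Deployment that mounts the ConfigMap over the default server config.
  kubectl apply -f - <<'EOF' || return 1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
          volumeMounts:
            - name: nginx-config
              mountPath: /etc/nginx/conf.d
      volumes:
        - name: nginx-config
          configMap:
            name: nginx-config
EOF

  # External LoadBalancer Service: the only entry point from the internet.
  kubectl expose deployment nginx --name=nginx-lb --port=80 --type=LoadBalancer
}
```

The internal Services are created before NGINX starts, because NGINX resolves the `proxy_pass` host names when it loads its configuration. Also, since `proxy_pass` has no URI part here, the full `/api/...` path is forwarded, so the backend would need to serve its routes under `/api`.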

Jenkins is a free and open source automation server. It helps automate the parts of software development related to building, testing, and deploying, facilitating continuous integration and continuous delivery.

It is a server-based system that runs in servlet containers such as Apache Tomcat. It supports version control tools including AccuRev, CVS, Subversion and Git, and can execute arbitrary shell scripts and Windows batch commands.

In this project, Jenkins was utilised in a pipeline configuration with GitHub webhooks to orchestrate every step of the automated deployment. The stages were:

• Declarative Checkout SCM - The first stage, triggered by a webhook, is source code management (SCM) checkout: Jenkins creates a blank workspace, clones the GitHub repository, switches into it and checks out the specified branch.

• Testing - The second stage is application testing, where both the front-end and the back-end are tested using the specified bash testing script, test files and test tool.

• Build Images - The third stage dockerizes the front-end and the back-end, using docker-compose.yaml to build each into an image containing all the dependencies required to deploy the application as a container or pod.

• Push - The fourth stage pushes the Docker images built in the previous stage to Docker Hub.

• Deployment - The fifth and final stage pulls the previously built Docker images from Docker Hub, then deploys them to the Terraform-built Kubernetes cluster using the bash deployment.sh script.
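The commands behind stages two to five could be as simple as the following sketch. It is an illustration, not our Jenkinsfile. `run_tests.sh` is a placeholder name and `deployment.sh` is the script mentioned above. The sketch also assumes `docker-compose.yaml` gives each service a Docker Hub `image:` name and that `docker login` has already been run on the Jenkins host.

```shell
# Hypothetical shell version of the stages that follow "Checkout SCM", as they
# might be called from `sh` steps in a Jenkinsfile. run_tests.sh is a
# placeholder name; deployment.sh is the deployment script described above.
stage() {
  echo "==> $1"
}

pipeline() {
  # The && chain stops at the first failing stage, so a failed test run
  # never builds, pushes or deploys images.
  stage "Testing"      && ./run_tests.sh &&
  stage "Build Images" && docker-compose build &&
  stage "Push"         && docker-compose push &&
  stage "Deployment"   && ./deployment.sh
}
```

Chaining the stages with `&&` means a failing stage stops the run, which mirrors how Jenkins aborts a pipeline when an `sh` step exits with a non-zero status.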

In future, we could introduce more tools, in the hope of lowering complexity and improving security.

We were hoping to automate as much as possible within this project. One of the things we didn't manage, because of time constraints, was automating snapshots of the Jenkins EC2 instance to save its state.

As another future improvement, we would have liked to test the application before pushing it, but it was an external application with no tests, and with a very limited time frame, testing wasn't an option.

Finally, we would have liked to automate the Ansible configuration. Later in the project we decided we wouldn't need Ansible, but we hoped we could still use it. The problem came when we realised that, by design, our Terraform infrastructure couldn't output the private IP addresses of the EKS cluster's nodes.
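One possible workaround is sketched here under assumptions; it is not something we implemented. Once `aws eks update-kubeconfig` has been run, the nodes' private IPs can be read from the Kubernetes API with a JSONPath query and written into an Ansible inventory. The `eks_nodes` group name and both function names are hypothetical.

```shell
# Hypothetical workaround: read the worker nodes' private IPs from the
# Kubernetes API (after `aws eks update-kubeconfig`) and write an Ansible inventory.
node_private_ips() {
  # One InternalIP per line, taken from each node's status.addresses list.
  kubectl get nodes \
    -o jsonpath='{range .items[*]}{.status.addresses[?(@.type=="InternalIP")].address}{"\n"}{end}'
}

write_inventory() {
  local out="${1:-inventory.ini}"
  {
    echo "[eks_nodes]"
    node_private_ips
  } > "$out"
}
```

Combined with the bastion ProxyCommand, that inventory would let Ansible reach the private worker nodes without Terraform having to output their addresses.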