Change parameters

EDIT: Recent versions of RTAB-Map have most of these parameters set by default. I recommend starting with the default values, then using this page for fine-tuning.

On this page, I will show how to speed up RTAB-Map for small environments and computers with less computing power. This example also uses only code under the BSD License, so no SIFT or SURF here.

The configuration referred to here can be downloaded here: config.ini. You must have at least RTAB-Map 0.7. You can load this configuration file in RTAB-Map with the Preferences->General settings (GUI)->"Load settings..." button, or see the screenshots below to adjust the parameters manually. Important: This configuration may use different descriptors (size/type) from your current configuration. If you have already created a database, you must delete it first (Edit -> Delete memory) to start with a clean database, or you will get an error like this:

[ERROR] (2014-10-02 15:01:07) VWDictionary.cpp:399::rtabmap::VWDictionary::addNewWords() Descriptors (size=16) are not the same size as already added words in dictionary(size=64)
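To make the cause of this error concrete, here is a minimal sketch of the kind of size check that produces it. The `VisualDictionary` class below is a hypothetical toy written for illustration, not RTAB-Map's actual `VWDictionary` implementation; it only assumes that the dictionary fixes its descriptor size from the first words added.

```python
class VisualDictionary:
    """Toy stand-in for a visual word dictionary (hypothetical, for illustration)."""

    def __init__(self):
        self.words = []              # descriptors stored so far
        self.descriptor_size = None  # fixed by the first descriptor added

    def add_new_words(self, descriptors):
        for d in descriptors:
            if self.descriptor_size is None:
                self.descriptor_size = len(d)
            elif len(d) != self.descriptor_size:
                # Mixing descriptor sizes makes word comparison meaningless
                raise ValueError(
                    f"Descriptors (size={len(d)}) are not the same size as "
                    f"already added words in dictionary(size={self.descriptor_size})"
                )
            self.words.append(d)

    def clear(self):
        """Equivalent in spirit to starting over with a clean database."""
        self.words = []
        self.descriptor_size = None


dictionary = VisualDictionary()
dictionary.add_new_words([[0.0] * 64])      # words from an older configuration
try:
    dictionary.add_new_words([bytes(16)])   # 16-byte descriptors from the new one
except ValueError as err:
    print(err)
dictionary.clear()                          # clean start
dictionary.add_new_words([bytes(16)])       # now accepted
```

The point of the sketch is only that words of two different sizes cannot live in the same dictionary, which is why the old database has to be deleted before switching descriptor type or size.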

I will show each panel from the Preferences dialog with the corresponding parameters of the configuration file (*.ini). Important parameters are identified on the screenshots.

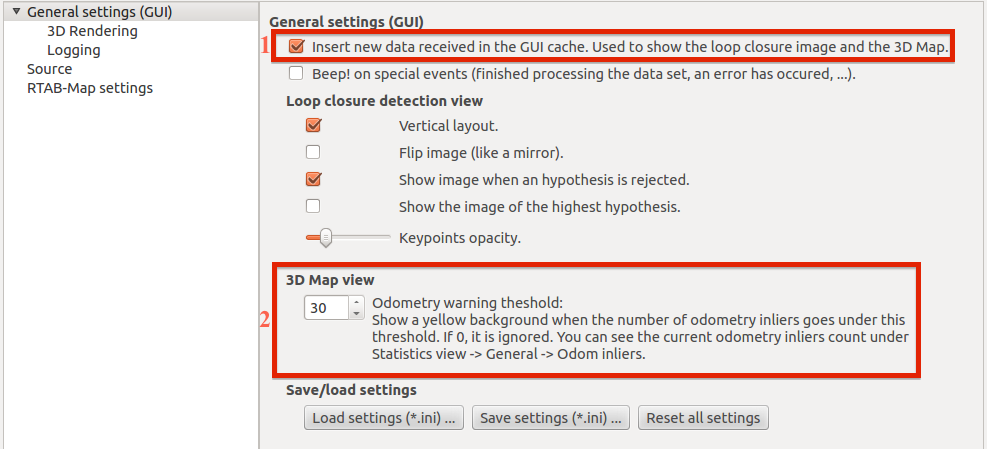

- This parameter should be true to update the 3D map.

- When odometry inliers between consecutive images are below this threshold, the background of the 3D map becomes yellow to warn the user that the scanned area doesn't have many discriminative features. Staying in this zone would cause odometry to lose tracking (red background).

- Don't show multiple clouds for the same scanned area. Clouds taken from the same point of view will be filtered to keep only the most recent one. Showing fewer point clouds will increase display performance.

- Radius of the filtering zone.

- Angle of the filtering zone.

- On a computer with limited graphics resources, high decimation of the point clouds will increase the display performance.

- Increase input rate to 20/30 Hz to feed the fast odometry approach used below.

- Activate SLAM mode if you want to extend the current map. Deactivate to just localize in a prebuilt map.

- Increasing the detection rate will increase the number of loop closures found when returning to a previous area, which gives more constraints for the map optimization and possibly a better map quality. Increasing the detection rate will increase the map size too, so more processing power is required. At 2 Hz, the visual dictionary will grow twice as fast as at 1 Hz.

- The data buffer size should be set to 1 to use only the most recent odometry data available.

- In this example, we assume that we are scanning a small area for which the real-time limit will not be reached, so we deactivate memory management.

- Same thing for the maximum working memory size, set it to infinite.

- "Publish statistics" and "Publish raw sensor data" should be true to send map data to GUI. "Publish pdf" and "Publish likelihood" can be set to false if you don't look at loop closure hypothesis values and likelihood.

- Don't regenerate the whole prediction matrix of the Bayes filter; just update it incrementally for removed/added nodes.

- Use tf-idf approach to compute the likelihood, which is faster than comparing images to images.

- Bad signatures should never be ignored in RGB-D SLAM mode.

- Keep raw sensor data in the database to be able to regenerate clouds from the resulting database.

- Keeping rehearsed locations is only helpful for debugging, so don't keep them.

- If you have enough RAM, use database in memory to increase database access speed. Otherwise, if you are limited in RAM, set it to false.
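The cloud filtering options above (radius and angle of the filtering zone) can be pictured with a small sketch. This is only my reading of the description "keep the most recent cloud for the same point of view", not RTAB-Map's actual code: the function name `filter_clouds`, the 2D `(x, y, yaw)` pose representation and the numeric values are all assumptions made for illustration.

```python
import math


def filter_clouds(poses, radius, angle):
    """Return the indices of the clouds to display.

    poses: list of (x, y, yaw) tuples in meters/radians, ordered oldest to newest.
    A cloud is hidden when a more recent displayed cloud lies within
    `radius` meters and `angle` radians of it.
    """
    kept = []
    for i in range(len(poses) - 1, -1, -1):  # visit newest clouds first
        x, y, yaw = poses[i]
        redundant = False
        for j in kept:
            kx, ky, kyaw = poses[j]
            dist = math.hypot(x - kx, y - ky)
            # Smallest absolute difference between the two headings
            dyaw = abs(math.atan2(math.sin(yaw - kyaw), math.cos(yaw - kyaw)))
            if dist <= radius and dyaw <= angle:
                redundant = True
                break
        if not redundant:
            kept.append(i)
    return sorted(kept)


# Hypothetical trajectory: clouds 0 and 1 share almost the same point of view
poses = [(0.0, 0.0, 0.0), (0.05, 0.0, 0.05), (2.0, 0.0, 0.0), (0.02, 0.0, 3.0)]
print(filter_clouds(poses, radius=0.1, angle=0.5))
```

In this example the oldest cloud is hidden because a newer one was taken from nearly the same position and heading, while the last cloud is kept even though it is close in position, because the camera was looking in a very different direction.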

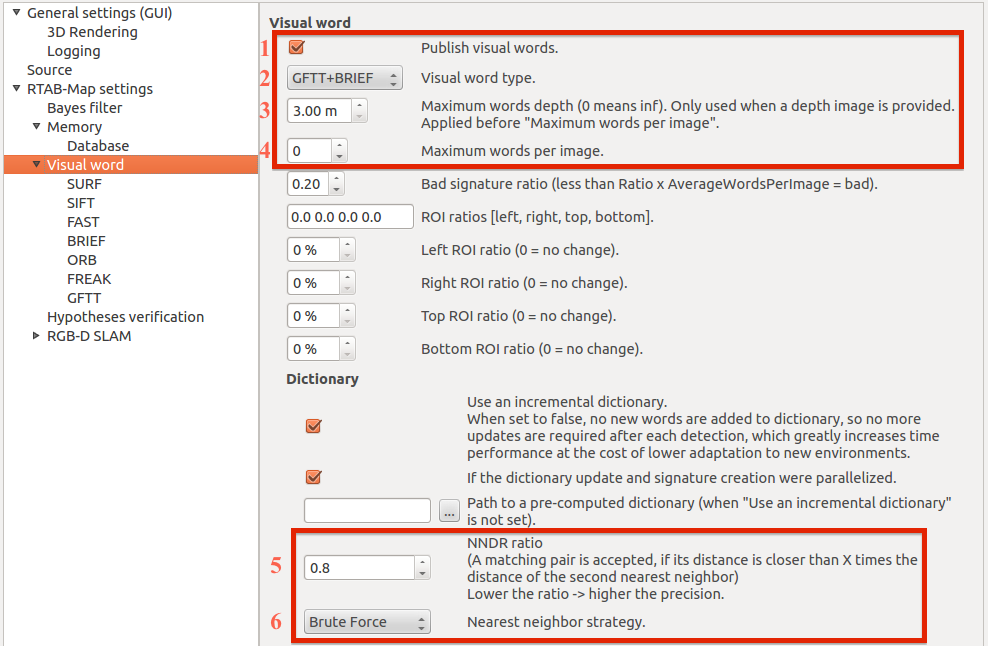

- Publishing visual words is only used to show features on the images in the GUI.

- Here we use a GFTT+BRIEF visual dictionary. This binary descriptor can be used under the BSD License. Though less descriptive than SIFT/SURF, it works well enough for small environments. For large-scale environments, SURF/SIFT are preferred. When changing the feature type, you must restart with a clean database (Edit->Delete memory).

- Maximum depth is set to be under the Kinect maximum precision distance.

- The maximum words per image is set to 0 because GFTT already has this parameter set below. EDIT: GFTT now uses this parameter to limit the number of extracted features, so set it to the value set below (400).

- NNDR (Nearest Neighbor Distance Ratio): Default 0.8.

- We use binary descriptors, so use Brute Force matching (kd-tree is only for float descriptors like SURF/SIFT).

- Use the smallest BRIEF descriptor. In medium-sized environments, more discriminative descriptors (size 32/64) might be better for loop closure detection (better likelihood for the Bayes filter).

- Set a maximum of 400 features to track. Increasing this number would increase processing time for odometry and the visual dictionary update, while improving the quality of odometry and of the loop closures detected. EDIT: This should now be set with the maximum words per image parameter above.

- Must be checked for RGB-D SLAM.

- We don't refine loop closure constraint transformations (computed visually) to save CPU time. While 2D ICP is useful on a robot with a 2D laser, 3D ICP suffers from problems that are difficult to detect (see the ICP page for a discussion of why 3D ICP is not used by default).

- When checked, the reference frame of the map is set according to the last node added to the map's graph, so the last map created.

- I disabled local loop closure detection because I use the local history approach for odometry. I've found that activating both at the same time results in a lower quality map. Local loop closure detection also adds more constraints to the graph, so more time is required to optimize the graph (not ideal when RTAB-Map's update rate is fast).

- The higher this threshold, the higher the quality of the loop closure transformation computed. However, fewer loop closures will be accepted.

- It seems that increasing this threshold to 2 cm (default 1 cm) doesn't reduce the quality of the loop closure transform much, because more visual word correspondences are kept.

- We just want good visual words with reliable depth values, which are under 3 m for Kinect-like sensors.

- Binary descriptors are less discriminative than float descriptors, so there are generally fewer matching visual words between the images of a loop closure. Because there are fewer corresponding visual words, the rejection rate of the loop closure is higher. Here, we re-extract features from both images and compare them directly (without using the visual dictionary), increasing the number of correspondences between the images.

- To avoid adding too much overhead, we use the FAST keypoint detector instead of GFTT, which is used for the visual dictionary and odometry. The quality of FAST keypoints is lower than with GFTT, but FAST is much faster (under 10 ms to extract keypoints).

- Don't limit the number of features extracted; we want the maximum number of correspondences between the images.

- Binary descriptors, so use Brute Force matching here too.

- The nearest neighbor distance ratio (NNDR) used for matching features.

- After some tests, I've found that GFTT+BRIEF gives the highest reliability across all available binary feature detectors. FAST/ORB detectors are faster than GFTT, but they generate features that are less good to track. FREAK and BRIEF descriptors give similar results.

- Local history size is used to keep a local map of the features instead of using only frame-to-frame odometry. The local map represents the 1000 most discriminative features across the past frames. A big advantage of using the local map is that it limits camera drift when the camera is not physically moving. Another advantage is that when the camera is moved into an area with fewer features and the odometry loses tracking, it is easier to recover tracking with the local map (with at least 1000 features) than with only the last frame (which could have very few features, making it more difficult, or even impossible, to recover lost odometry). Local loop closure in time should also be disabled when the local history is enabled.

- It seems that increasing this threshold to 2 cm (default 1 cm) doesn't reduce the quality of the loop closure transform much, because more visual word correspondences are kept.

- The higher this threshold, the higher the quality of the loop closure transformation computed. However, odometry is more likely to be lost.

- We just want good visual words with reliable depth values, which are under 3 m for Kinect-like sensors.

- Binary descriptors, so use Brute Force matching here too.

- The nearest neighbor distance ratio (NNDR) used for matching features.
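Several of the parameters above combine brute-force matching of binary descriptors with an NNDR threshold. As a rough illustration of what that means, here is a minimal sketch of the standard nearest neighbor distance ratio test using Hamming distance. The function names and the tiny 2-byte descriptors are hypothetical, and whether RTAB-Map compares with `<` or `<=` is an assumption I have not checked; the 0.8 default is the value mentioned above.

```python
def hamming(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length binary descriptors."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def match_nndr(queries, train, nndr=0.8):
    """Brute-force matching with the nearest neighbor distance ratio test.

    Returns (query_index, train_index) pairs for which the best distance
    is at most `nndr` times the second-best distance.
    """
    matches = []
    for qi, q in enumerate(queries):
        # Brute force: distance to every train descriptor, smallest first
        dists = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        if len(dists) < 2:
            continue  # the ratio test needs two neighbors
        (d1, best), (d2, _) = dists[0], dists[1]
        if d1 <= nndr * d2:
            matches.append((qi, best))
    return matches


# Hypothetical 2-byte descriptors (real BRIEF descriptors are 16/32/64 bytes)
train = [bytes([0b00000000, 0]), bytes([0b11111111, 0xFF]), bytes([0b00001111, 0])]
queries = [bytes([0b00000001, 0]), bytes([0b00000011, 0])]
print(match_nndr(queries, train))
```

The first query is clearly closer to one descriptor than to any other, so it is accepted. The second query is equally close to two descriptors, so the match is ambiguous and the ratio test rejects it. A lower NNDR keeps fewer but more reliable correspondences.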