Docker image with Uvicorn managed by Gunicorn for high-performance FastAPI web applications in Python 3.8 with performance auto-tuning.

GitHub repo: https://github.com/sebastienmascha/data-science-docker-gunicorn-fastapi

Docker Hub image: https://hub.docker.com/r/smascha/python-uvicorn-fastapi/

- Development with auto-reload

- Development with Jupyter Lab:

jupyter lab --ip=0.0.0.0 --allow-root --NotebookApp.custom_display_url=http://127.0.0.1:8888

- Production with Gunicorn and Uvicorn workers tuned to your machine's CPU and RAM.

- Poetry for Python packages and environment management.

Uvicorn is a lightning-fast "ASGI" server.

It runs asynchronous Python web code in a single process.

You can use Gunicorn to manage Uvicorn and run multiple of these concurrent processes.

That way, you get the best of concurrency and parallelism.

FastAPI is a modern, fast (high-performance), web framework for building APIs with Python 3.6+.

The key features are:

- Fast: Very high performance production-ready Python framework, on par with NodeJS and Go (thanks to Starlette and Pydantic).

- Fewer bugs: Reduces human (developer) induced errors by about 40% (an estimate).

- Intuitive: Great editor support. Completion everywhere. Less time debugging.

- Standards-based: Based on (and fully compatible with) the open standards for APIs: OpenAPI (previously known as Swagger) and JSON Schema.

Note: FastAPI is based on Starlette and adds several features on top of it that are useful for APIs and other cases: data validation, data conversion, documentation with OpenAPI, dependency injection, security/authentication, and others.

By default, the dependencies are managed with Poetry, so install Poetry first.

Configure Poetry to create the virtualenv directory inside the project:

$ poetry config virtualenvs.in-project true

From ./backend/app/, install all the dependencies with:

$ poetry install

Then start a shell session in the new environment with:

$ poetry shell

Then run the Python app or Jupyter Lab:

$ cd ..

$ set -o allexport; source .env; source .env_development; set +o allexport # Export the variables defined in the .env files

$ sh start-reload.sh # Python App

$ jupyter lab # Jupyter Lab

Next, open your editor at ./backend/app/ (instead of the project root: ./), so that you see an ./app/ directory with your code inside. That way, your editor will be able to find all the imports, etc. Make sure your editor uses the environment you just created with Poetry.

Modify or add SQLAlchemy models in ./backend/app/app/models/, Pydantic schemas in ./backend/app/app/schemas/, API endpoints in ./backend/app/app/api/, and CRUD (Create, Read, Update, Delete) utils in ./backend/app/app/crud/. The easiest approach may be to copy the ones for Items (models, endpoints, and CRUD utils) and adapt them to your needs.

During development, you can change Docker Compose settings in the file docker-compose.yml. Changes to that file only affect the local development environment, not the production environment, so you can add "temporary" changes that help the development workflow (add environment variables, etc.).

For example, the directory with the backend code is mounted as a Docker "host volume", mapping the code you change live to the directory inside the container. That allows you to test your changes right away, without having to build the Docker image again. This should only be done during development; for production, you should build the Docker image with a recent version of the backend code. But during development, it allows you to iterate very fast.

There is also a command override that runs /start-reload.sh (included in the base image) instead of the default /start.sh (also included in the base image). It starts a single server process (instead of multiple, as in production) and reloads the process whenever the code changes. Keep in mind that if you have a syntax error and save the Python file, the server will break and exit, and the container will stop. After that, you can restart the container by fixing the error and running again:

$ docker-compose up -d

To get inside the running container with a bash session, use:

$ docker-compose exec backend bash

You should see output like:

root@7f2607af31c3:/app#

That means you are in a bash session inside your container, as the root user, under the /app directory.

There you can use the script /start-reload.sh to run the debug live reloading server. You can run that script from inside the container with:

$ bash /start-reload.sh

That runs the live-reloading server, which reloads automatically when it detects code changes.

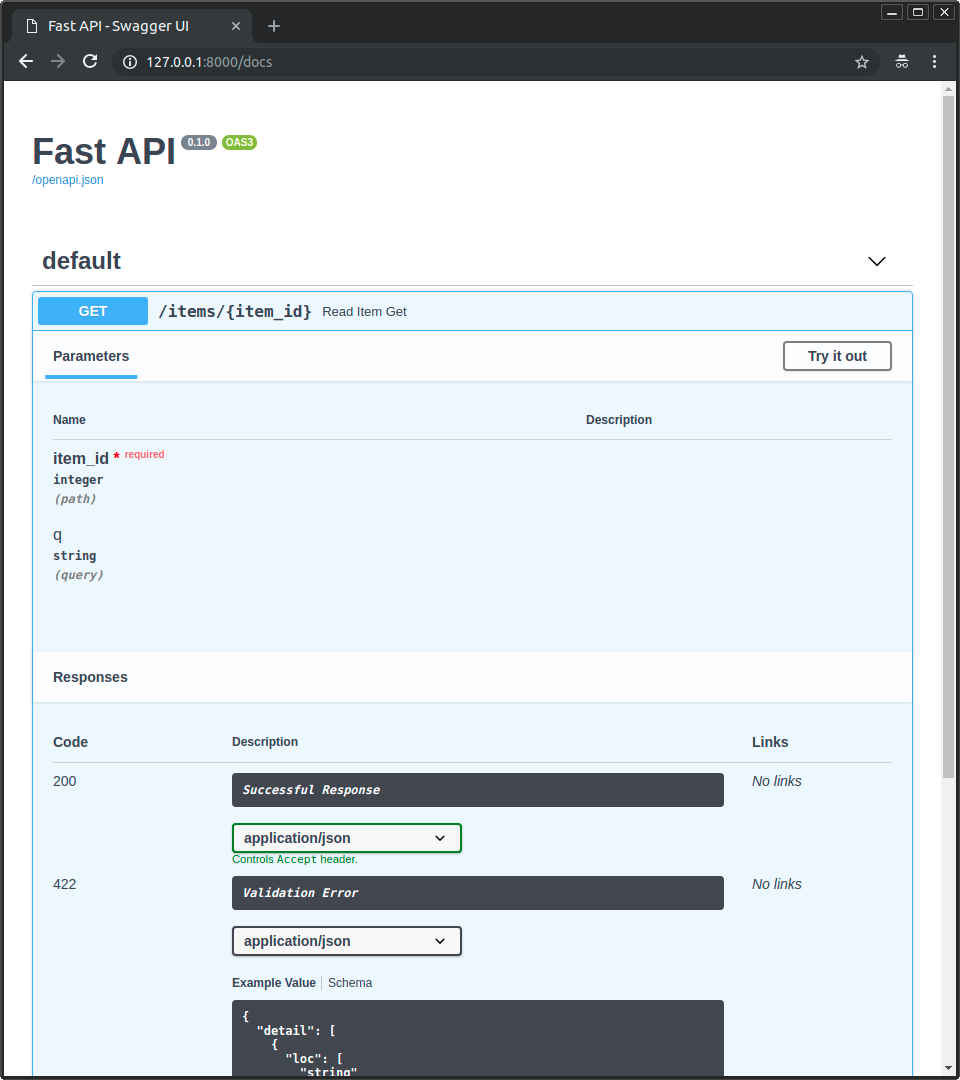

Now you can go to http://192.168.99.100/docs or http://127.0.0.1/docs (or the equivalent, using your Docker host).

You will see the automatic interactive API documentation (provided by Swagger UI). The alternative documentation (provided by ReDoc) is at http://192.168.99.100/redoc.

For development, it's useful to be able to mount the contents of the application code inside of the container as a Docker "host volume", to be able to change the code and test it live, without having to build the image every time.

In that case, it's also useful to run the server with live auto-reload, so that it re-starts automatically at every code change.

The additional script /start-reload.sh runs Uvicorn alone (without Gunicorn) and in a single process.

For example, instead of running:

docker run -d -p 80:80 myimage

You could run:

docker run -d -p 80:80 -v $(pwd):/app myimage /start-reload.sh

- -v $(pwd):/app: means that the directory $(pwd) should be mounted as a volume inside of the container at /app.
- $(pwd): runs pwd ("print working directory") and puts the result into the string.
- /start-reload.sh: adding something (like /start-reload.sh) at the end of the command replaces the default "command" with this one. In this case, it replaces the default (/start.sh) with the development alternative /start-reload.sh.

As /start-reload.sh doesn't run with Gunicorn, any of the configurations you put in a gunicorn_conf.py file won't apply.

But these environment variables, described below, work the same way:

- MODULE_NAME
- VARIABLE_NAME
- APP_MODULE
- HOST
- PORT
- LOG_LEVEL
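To see how those variables map onto the development server, here is a rough Python sketch that assembles an equivalent Uvicorn command line. It assumes /start-reload.sh passes them to uvicorn through --reload, --host, --port and --log-level (all real Uvicorn CLI options) plus the APP_MODULE string; build_reload_command is a hypothetical helper, and its app.main default ignores the main fallback for /app/main.py.

```python
import os
from typing import Dict, List


def build_reload_command(env: Dict[str, str]) -> List[str]:
    """Assemble a single-process, auto-reloading Uvicorn command (sketch, not the image's script)."""
    host = env.get("HOST", "0.0.0.0")
    port = env.get("PORT", "80")
    log_level = env.get("LOG_LEVEL", "info")
    module_name = env.get("MODULE_NAME", "app.main")  # simplified default
    variable_name = env.get("VARIABLE_NAME", "app")
    # APP_MODULE, when set, overrides MODULE_NAME and VARIABLE_NAME.
    app_module = env.get("APP_MODULE", f"{module_name}:{variable_name}")
    return ["uvicorn", "--reload", "--host", host, "--port", port,
            "--log-level", log_level, app_module]


if __name__ == "__main__":
    # Only print the command; inside the container you could pass it to subprocess.run().
    print(" ".join(build_reload_command(dict(os.environ))))
```

Note that nothing here touches Gunicorn, which is why gunicorn_conf.py settings don't apply in this mode.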

If you know about Python Jupyter Notebooks, you can take advantage of them during local development.

The docker-compose.yml file sends a variable env with a value dev to the build process of the Docker image (during local development) and the Dockerfile has steps to then install and configure Jupyter inside your Docker container.

So you can enter the running Docker container:

docker-compose exec backend bash

And use the environment variable $JUPYTER to run a Jupyter Notebook with everything configured to listen on the public port (so that you can use it from your browser).

It will output something like:

root@73e0ec1f1ae6:/app# $JUPYTER
[I 12:02:09.975 NotebookApp] Writing notebook server cookie secret to /root/.local/share/jupyter/runtime/notebook_cookie_secret
[I 12:02:10.317 NotebookApp] Serving notebooks from local directory: /app
[I 12:02:10.317 NotebookApp] The Jupyter Notebook is running at:
[I 12:02:10.317 NotebookApp] http://(73e0ec1f1ae6 or 127.0.0.1):8888/?token=f20939a41524d021fbfc62b31be8ea4dd9232913476f4397
[I 12:02:10.317 NotebookApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).
[W 12:02:10.317 NotebookApp] No web browser found: could not locate runnable browser.
[C 12:02:10.317 NotebookApp]

Copy/paste this URL into your browser when you connect for the first time,
to login with a token:
http://localhost:8888/?token=f20939a41524d021fbfc62b31be8ea4dd9232913476f4397

You will have a full Jupyter Notebook running inside your container that has direct access to your database by the container name (db), etc. So you can run sections of your backend code directly, for example with the VS Code Python Jupyter Interactive Window or Hydrogen.

To test the backend run:

$ sh ./scripts/test.sh

The file ./scripts/test.sh has the commands to generate a testing docker-stack.yml file, start the stack, and test it.

The tests run with Pytest; modify and add tests in ./backend/app/app/tests/.

If you use GitLab CI, the tests will run automatically.

To run the tests locally, start the stack with this command:

DOMAIN=backend sh ./scripts/test-local.sh

The ./backend/app directory is mounted as a "host volume" inside the Docker container (set in the file docker-compose.dev.volumes.yml).

You can rerun the tests on live code, or run them against a stack that is already up, with:

docker-compose exec backend /app/tests-start.sh

That /app/tests-start.sh script just calls pytest after making sure that the rest of the stack is running. If you need to pass extra arguments to pytest, you can pass them to that command and they will be forwarded.

For example, to stop on the first error:

docker-compose exec backend bash /app/tests-start.sh -x

Because the test scripts forward arguments to pytest, you can enable test coverage HTML report generation by passing --cov-report=html.

To run the local tests with coverage HTML reports:

sh ./scripts/test-local.sh --cov-report=html

To run the tests in a running stack with coverage HTML reports:

docker-compose exec backend bash /app/tests-start.sh --cov-report=html

To start the production stack:

$ docker-compose -f docker-compose.prod.yml up -d

The image includes a default Gunicorn Python config file at /gunicorn_conf.py.

It uses the environment variables described below to set all the configurations.

You can override it by including a file in:

- /app/gunicorn_conf.py
- /app/app/gunicorn_conf.py
- /gunicorn_conf.py
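As a starting point, a custom /app/gunicorn_conf.py could look like the minimal sketch below. It keeps reading the environment variables this README documents; bind, workers, worker_class, loglevel, timeout, keepalive, graceful_timeout, accesslog and errorlog are standard Gunicorn setting names, but the simplified worker count is an assumption for illustration, not the image's exact logic.

```python
# /app/gunicorn_conf.py: a minimal sketch of a custom Gunicorn config.
# Gunicorn executes this file as Python and reads the module-level names as settings.
import multiprocessing
import os

host = os.getenv("HOST", "0.0.0.0")
port = os.getenv("PORT", "80")
# BIND, when set, takes precedence over HOST and PORT.
bind = os.getenv("BIND") or f"{host}:{port}"

# Simplified: WEB_CONCURRENCY if set, else one worker per core with a floor of 2.
# (The image's own file also honours WORKERS_PER_CORE and MAX_WORKERS.)
workers = int(os.getenv("WEB_CONCURRENCY") or max(multiprocessing.cpu_count(), 2))

worker_class = os.getenv("WORKER_CLASS", "uvicorn.workers.UvicornWorker")
loglevel = os.getenv("LOG_LEVEL", "info")
timeout = int(os.getenv("TIMEOUT", "120"))
keepalive = int(os.getenv("KEEP_ALIVE", "2"))
graceful_timeout = int(os.getenv("GRACEFUL_TIMEOUT", "120"))

# "-" means stdout/stderr; an empty value disables the log (None).
accesslog = os.getenv("ACCESS_LOG", "-") or None
errorlog = os.getenv("ERROR_LOG", "-") or None
```

Point GUNICORN_CONF at the file, or place it at one of the paths listed above, so the image picks it up.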

If you need to run anything before starting the app, you can add a file prestart.sh to the directory /app. The image will automatically detect and run it before starting everything.

For example, if you want to add Alembic SQL migrations (with SQLAlchemy), you could create a ./app/prestart.sh file in your code directory (that will be copied by your Dockerfile) with:

#! /usr/bin/env bash

# Let the DB start
sleep 10;
# Run migrations
alembic upgrade head

It would wait 10 seconds to give the database some time to start and then run that alembic command.

If you need to run a Python script before starting the app, you could make the /app/prestart.sh file run your Python script, with something like:

#! /usr/bin/env bash

# Run custom Python script before starting
python /app/my_custom_prestart_script.py

You can customize the location of the prestart script with the environment variable PRE_START_PATH described below.
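The fixed sleep 10 in the Alembic example is only a guess at how long the database needs. As an alternative, the Python pre-start script could poll the database port until it accepts connections. This is a sketch: DB_HOST and DB_PORT are hypothetical variables you would set yourself (for example DB_HOST=db, the database container name, and DB_PORT=5432, the PostgreSQL default), and the wait is skipped when DB_HOST is unset.

```python
# /app/my_custom_prestart_script.py: wait for the database before the app starts (sketch).
# DB_HOST / DB_PORT are hypothetical variables, e.g. DB_HOST=db and DB_PORT=5432.
import os
import socket
import sys
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 1.0) -> bool:
    """Return True once host:port accepts a TCP connection, False after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Connecting and immediately closing is enough to prove the port is open.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:  # refused, unreachable, DNS failure or connect timeout
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


if __name__ == "__main__":
    db_host = os.getenv("DB_HOST")
    # Skip the wait entirely when DB_HOST is not configured.
    if db_host and not wait_for_port(db_host, int(os.getenv("DB_PORT", "5432"))):
        sys.exit(f"Database at {db_host} was not reachable in time")
```

An open TCP port only shows the server is listening, not that it is ready for queries, so migrations may still need their own retry logic.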

These are the environment variables that you can set in the container to configure it and their default values:

- MODULE_NAME
- VARIABLE_NAME
- APP_MODULE
- GUNICORN_CONF
- WORKERS_PER_CORE
- MAX_WORKERS
- WEB_CONCURRENCY
- HOST
- PORT
- BIND
- LOG_LEVEL
- WORKER_CLASS
- TIMEOUT
- KEEP_ALIVE
- GRACEFUL_TIMEOUT
- ACCESS_LOG
- ERROR_LOG
- GUNICORN_CMD_ARGS
- PRE_START_PATH

MODULE_NAME: The Python "module" (file) to be imported by Gunicorn; this module contains the actual application in a variable.

By default:

- app.main if there's a file /app/app/main.py, or
- main if there's a file /app/main.py

For example, if your main file was at /app/custom_app/custom_main.py, you could set it like:

docker run -d -p 80:80 -e MODULE_NAME="custom_app.custom_main" myimage

VARIABLE_NAME: The variable inside of the Python module that contains the FastAPI application.

By default:

app

For example, if your main Python file has something like:

from fastapi import FastAPI

api = FastAPI()


@api.get("/")
def read_root():
    return {"Hello": "World"}

In this case, api would be the variable with the FastAPI application. You could set it like:

docker run -d -p 80:80 -e VARIABLE_NAME="api" myimage

APP_MODULE: The string with the Python module and the variable name passed to Gunicorn.

By default, set based on the variables MODULE_NAME and VARIABLE_NAME:

app.main:app or main:app

You can set it like:

docker run -d -p 80:80 -e APP_MODULE="custom_app.custom_main:api" myimage

GUNICORN_CONF: The path to a Gunicorn Python configuration file.

By default:

- /app/gunicorn_conf.py if it exists
- /app/app/gunicorn_conf.py if it exists
- /gunicorn_conf.py (the included default)

You can set it like:

docker run -d -p 80:80 -e GUNICORN_CONF="/app/custom_gunicorn_conf.py" myimage

You can use the config file from the base image as a starting point for yours.
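The default lookup order for MODULE_NAME, VARIABLE_NAME, APP_MODULE and GUNICORN_CONF described above can be summarised in a few lines of Python. This is an illustrative sketch, not the image's /start.sh: resolve_defaults and its root parameter are hypothetical, added so the logic can be tried outside the container, and it simplifies the MODULE_NAME default by falling back to main whenever /app/app/main.py is absent.

```python
from pathlib import Path
from typing import Dict


def resolve_defaults(env: Dict[str, str], root: str = "/") -> Dict[str, str]:
    """Resolve APP_MODULE and GUNICORN_CONF from env vars and files (illustrative sketch)."""
    base = Path(root)  # "/" inside the container; any directory when experimenting

    # MODULE_NAME: app.main if /app/app/main.py exists, otherwise main
    # (simplification: the docs choose main when /app/main.py exists).
    default_module = "app.main" if (base / "app/app/main.py").is_file() else "main"
    module_name = env.get("MODULE_NAME", default_module)
    variable_name = env.get("VARIABLE_NAME", "app")
    # APP_MODULE, when set, overrides MODULE_NAME and VARIABLE_NAME.
    app_module = env.get("APP_MODULE", f"{module_name}:{variable_name}")

    # GUNICORN_CONF: explicit value, else the first existing candidate, else the image default.
    gunicorn_conf = env.get("GUNICORN_CONF")
    if gunicorn_conf is None:
        gunicorn_conf = "/gunicorn_conf.py"
        for candidate in ("app/gunicorn_conf.py", "app/app/gunicorn_conf.py"):
            if (base / candidate).is_file():
                gunicorn_conf = "/" + candidate
                break

    return {"APP_MODULE": app_module, "GUNICORN_CONF": gunicorn_conf}
```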

WORKERS_PER_CORE: This image checks how many CPU cores are available on the current server running your container.

It will set the number of workers to the number of CPU cores multiplied by this value.

By default:

1

You can set it like:

docker run -d -p 80:80 -e WORKERS_PER_CORE="3" myimage

If you used the value 3 on a server with 2 CPU cores, it would run 6 worker processes.

You can use floating point values too.

So, for example, suppose you have a big server (let's say, with 8 CPU cores) running several applications, and a FastAPI application that you know won't need high performance. If you don't want to waste server resources, you could make it use 0.5 workers per CPU core. For example:

docker run -d -p 80:80 -e WORKERS_PER_CORE="0.5" myimage

On a server with 8 CPU cores, this would make it start only 4 worker processes.

Note: By default, if WORKERS_PER_CORE is 1 and the server has only 1 CPU core, instead of starting 1 single worker, it will start 2. This is to avoid bad performance and blocking applications (server application) on small machines (server machine/cloud/etc). This can be overridden using WEB_CONCURRENCY.

MAX_WORKERS: Set the maximum number of workers to use.

You can use it to let the image compute the number of workers automatically while making sure it's limited to a maximum.

This can be useful, for example, if each worker uses a database connection and your database has a maximum limit of open connections.

By default it's not set, meaning that it's unlimited.

You can set it like:

docker run -d -p 80:80 -e MAX_WORKERS="24" myimage

This would make the image start at most 24 workers, independent of how many CPU cores are available on the server.

WEB_CONCURRENCY: Override the automatic definition of the number of workers.

By default:

- Set to the number of CPU cores on the current server multiplied by the environment variable WORKERS_PER_CORE. So, on a server with 2 cores, by default it will be set to 2.

You can set it like:

docker run -d -p 80:80 -e WEB_CONCURRENCY="2" myimage

This would make the image start 2 worker processes, independent of how many CPU cores are available on the server.
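The rules for WORKERS_PER_CORE, MAX_WORKERS and WEB_CONCURRENCY can be condensed into one small function. It is a sketch, not the image's code: compute_workers is a hypothetical name, and it assumes that cores times WORKERS_PER_CORE is truncated to an integer, that the minimum of 2 workers applies whenever that product is below 2 (not only on single-core machines), and that MAX_WORKERS only caps the automatic value, never an explicit WEB_CONCURRENCY.

```python
from typing import Optional


def compute_workers(cores: int, workers_per_core: float = 1.0,
                    max_workers: Optional[int] = None,
                    web_concurrency: Optional[int] = None) -> int:
    """Gunicorn worker count from WORKERS_PER_CORE, MAX_WORKERS and WEB_CONCURRENCY (sketch)."""
    if web_concurrency is not None:
        # WEB_CONCURRENCY overrides the automatic calculation entirely.
        return web_concurrency
    # cores x WORKERS_PER_CORE, truncated to an int, with a floor of 2 workers.
    workers = max(int(cores * workers_per_core), 2)
    if max_workers is not None:
        # MAX_WORKERS caps the automatically computed value.
        workers = min(workers, max_workers)
    return workers
```

In a config file you would feed it from os.cpu_count() and the parsed environment variables, for example compute_workers(os.cpu_count() or 1, float(os.getenv("WORKERS_PER_CORE", "1"))). With the examples above, 2 cores at 3 workers per core gives 6, and 8 cores at 0.5 gives 4.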

HOST: The "host" used by Gunicorn, the IP where Gunicorn will listen for requests.

It is the host inside of the container.

So, for example, if you set this variable to 127.0.0.1, it will only be available inside the container, not in the host running it.

It is provided for completeness, but you probably shouldn't change it.

By default:

0.0.0.0

PORT: The port the container should listen on.

If you are running your container in a restrictive environment that forces you to use some specific port (like 8080) you can set it with this variable.

By default:

80

You can set it like:

docker run -d -p 80:8080 -e PORT="8080" myimage

BIND: The actual host and port passed to Gunicorn.

By default, set based on the variables HOST and PORT.

So, if you didn't change anything, it will be set by default to:

0.0.0.0:80

You can set it like:

docker run -d -p 80:8080 -e BIND="0.0.0.0:8080" myimage

LOG_LEVEL: The log level for Gunicorn.

One of:

- debug
- info
- warning
- error
- critical

By default, set to info.

If you need to squeeze out more performance at the cost of logging, set it to warning. You can set it like:

docker run -d -p 80:80 -e LOG_LEVEL="warning" myimage

WORKER_CLASS: The class to be used by Gunicorn for the workers.

By default, set to uvicorn.workers.UvicornWorker.

The fact that it uses Uvicorn is what allows using ASGI frameworks like FastAPI, and that is also what provides the maximum performance.

You probably shouldn't change it.

But if for some reason you need to use the alternative Uvicorn worker: uvicorn.workers.UvicornH11Worker you can set it with this environment variable.

You can set it like:

docker run -d -p 80:80 -e WORKER_CLASS="uvicorn.workers.UvicornH11Worker" myimage

TIMEOUT: Workers silent for more than this many seconds are killed and restarted.

Read more about it in the Gunicorn docs: timeout.

By default, set to 120.

Notice that Uvicorn and ASGI frameworks like FastAPI are async, not sync. So it's probably safe to have higher timeouts than for sync workers.

You can set it like:

docker run -d -p 80:80 -e TIMEOUT="20" myimage

KEEP_ALIVE: The number of seconds to wait for requests on a Keep-Alive connection.

Read more about it in the Gunicorn docs: keepalive.

By default, set to 2.

You can set it like:

docker run -d -p 80:80 -e KEEP_ALIVE="20" myimage

GRACEFUL_TIMEOUT: Timeout for graceful worker restarts.

Read more about it in the Gunicorn docs: graceful-timeout.

By default, set to 120.

You can set it like:

docker run -d -p 80:80 -e GRACEFUL_TIMEOUT="20" myimage

ACCESS_LOG: The access log file to write to.

By default "-", which means stdout (print in the Docker logs).

If you want to disable ACCESS_LOG, set it to an empty value.

For example, you could disable it with:

docker run -d -p 80:80 -e ACCESS_LOG= myimage

ERROR_LOG: The error log file to write to.

By default "-", which means stderr (print in the Docker logs).

If you want to disable ERROR_LOG, set it to an empty value.

For example, you could disable it with:

docker run -d -p 80:80 -e ERROR_LOG= myimage

GUNICORN_CMD_ARGS: Any additional command line settings for Gunicorn can be passed in the GUNICORN_CMD_ARGS environment variable.

Read more about it in the Gunicorn docs: Settings.

These settings will have precedence over the other environment variables and any Gunicorn config file.

For example, if you have a custom TLS/SSL certificate that you want to use, you could copy them to the Docker image or mount them in the container, and set --keyfile and --certfile to the location of the files, for example:

docker run -d -p 443:443 -e GUNICORN_CMD_ARGS="--keyfile=/secrets/key.pem --certfile=/secrets/cert.pem" -e PORT=443 myimage

Note: instead of handling TLS/SSL yourself and configuring it in the container, it's recommended to use a "TLS Termination Proxy" like Traefik. You can read more about it in the FastAPI documentation about HTTPS.

PRE_START_PATH: The path where the pre-start script is located.

By default, set to /app/prestart.sh.

You can set it like:

docker run -d -p 80:80 -e PRE_START_PATH="/custom/script.sh" myimage

This project is licensed under the terms of the MIT license.